Google Cloud Platform Technology Nuggets - November 16-30, 2024 Edition

Welcome to the November 16–30, 2024 edition of Google Cloud Platform Technology Nuggets.

The nuggets are also available in the form of a Podcast. Subscribe to it today.

This edition is a bit light on announcements, since few blog posts were published on the official site over the Thanksgiving holidays.

Containers and Kubernetes

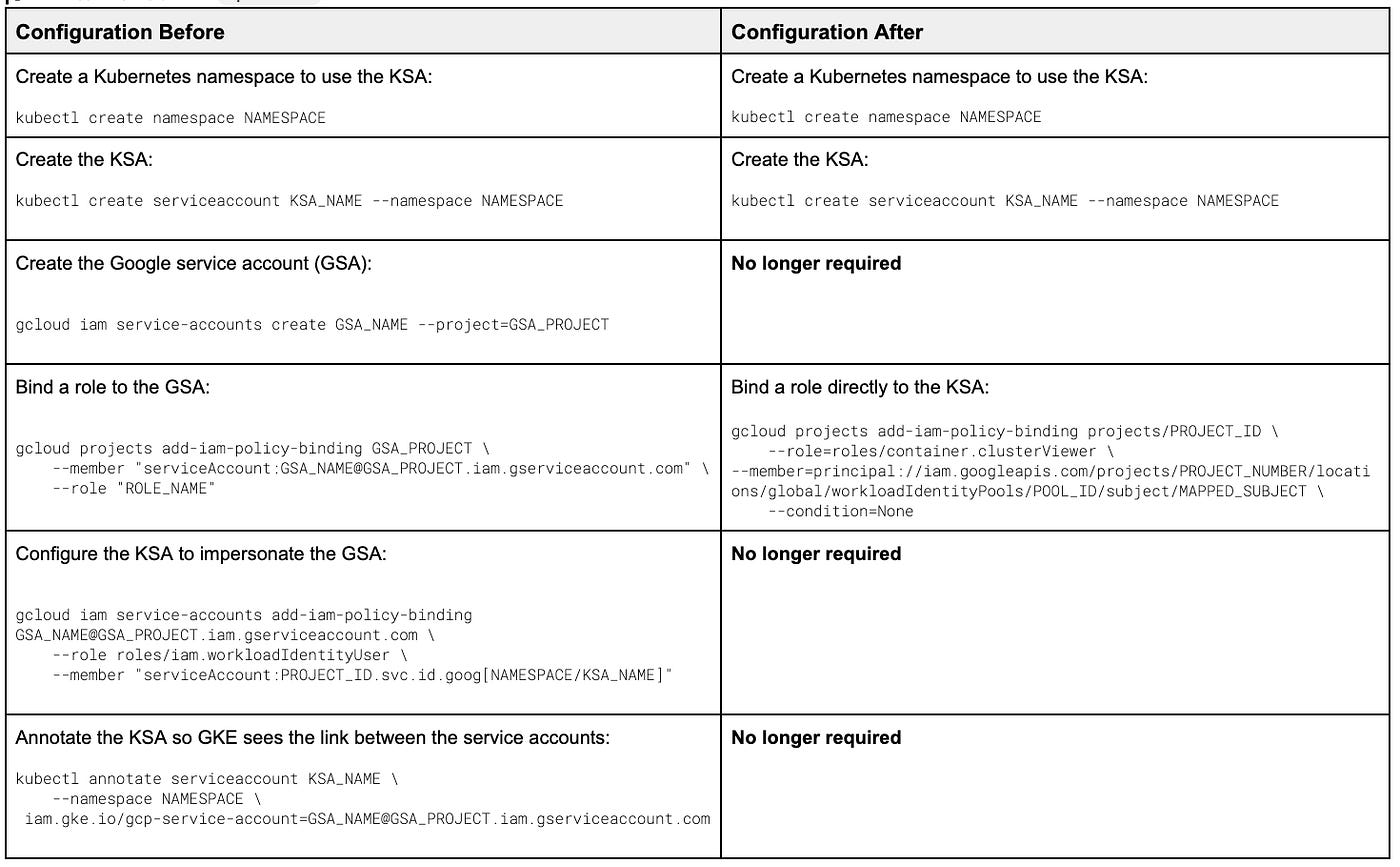

For GKE workloads, GKE Workload Identity is the recommended way to securely authenticate to Google Cloud APIs and services. Two things to note: GKE Workload Identity was renamed earlier this year to Workload Identity Federation for GKE and, more importantly, it is now easier to use thanks to a closer integration with Google Cloud’s IAM (Identity and Access Management). The simplification comes from Google Cloud IAM policies being able to reference GKE workloads and Kubernetes service accounts directly, which reduces the number of steps, as the table below indicates.

Check out the blog post for more details.
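
To make the idea of an IAM policy referencing a Kubernetes service account directly a little more concrete, here is a minimal sketch that builds the principal identifier such a policy binding would use. The URI layout reflects my understanding of the documented format and should be verified against the current Workload Identity Federation for GKE documentation; the function name and the sample values are hypothetical.

```python
def gke_workload_principal(project_number: str, project_id: str,
                           namespace: str, ksa_name: str) -> str:
    """Build the IAM principal identifier for a Kubernetes service account.

    Assumption: the layout below matches the documented principal format
    for Workload Identity Federation for GKE. Verify before relying on it.
    """
    return (
        f"principal://iam.googleapis.com/projects/{project_number}"
        f"/locations/global/workloadIdentityPools/{project_id}.svc.id.goog"
        f"/subject/ns/{namespace}/sa/{ksa_name}"
    )


# Hypothetical values, for illustration only.
member = gke_workload_principal("123456789012", "my-project", "default", "my-ksa")
```

The resulting string would be used as the member of an IAM role binding, in place of a separate Google service account that the Kubernetes service account impersonates.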

Identity and Security

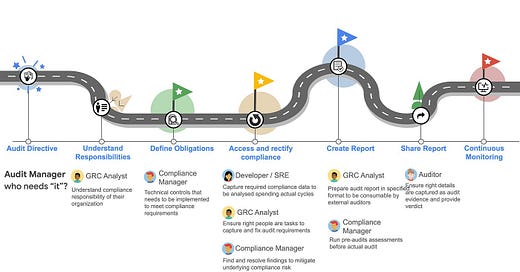

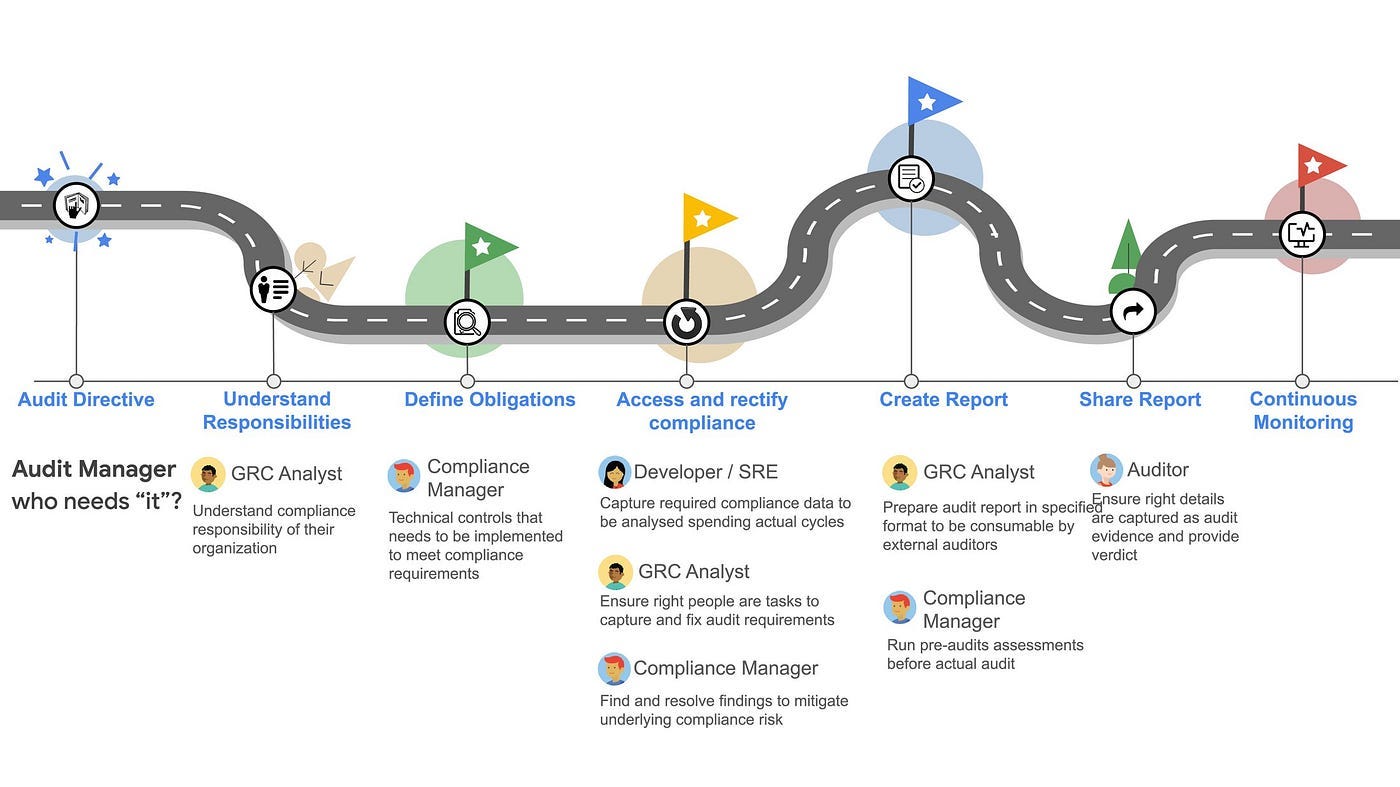

The Audit Manager service in Google Cloud is now in General Availability (GA). As per the blog post, a cloud compliance audit process involves defining responsibilities, identifying and mitigating risks, collecting supporting data, and generating a final report. Audit Manager is there to help you manage this process. Check out the blog post for more details.

The Audit Manager service is available from Google Cloud Console via the Compliance tab.

The second CISO Perspectives for November 2024 is out. It features an interesting discussion on whether cyber-insurance that helps companies deal with ransomware attacks and demands is a good approach, or whether such schemes should be eliminated outright. Interestingly, the firms providing cyber-insurance could help organizations strengthen their security posture by setting minimum security requirements that must be met to even qualify for the insurance. Check out the blog post for more details and other related security news.

Machine Learning

Cloud Translation API has received significant updates. Key ones include:

Support for 189 languages

Ability to choose a model depending on your requirement. Use the NMT model for translating general text or choose Adaptive Translation for customization in real-time.

Check out the blog post to understand the two tiers in which Cloud Translation API is available along with the new features.

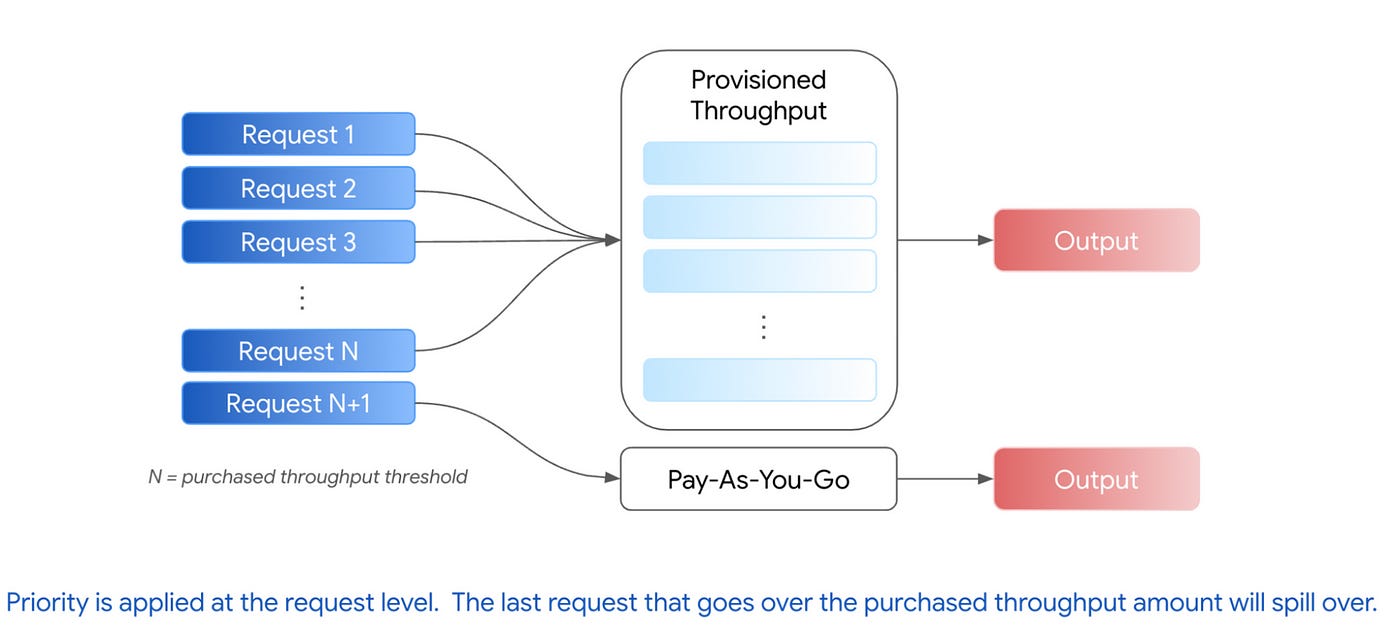

What does error code 429 mean when working with LLMs in Google Cloud? Error code 429 is returned when the number of your requests exceeds the capacity allocated to process them. Given the current state of capacity for running AI workloads, you may well start seeing this error in your application. If you do, check out this blog post, which highlights the scenarios in which these errors occur along with best practices for addressing them, so that you retain the quality of experience you want your users to have. These best practices range from using exponential backoff to a specific Vertex AI feature, Provisioned Throughput (which reserves dedicated capacity for generative AI models on the Vertex AI platform).
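
Exponential backoff, mentioned above, can be sketched in a few lines. This is a generic illustration and not the code from the blog post: `TooManyRequestsError` is a hypothetical stand-in for whatever exception your client library raises on a 429, and `request_fn`, the attempt count and the delay limits are illustrative assumptions.

```python
import random
import time


class TooManyRequestsError(Exception):
    """Hypothetical stand-in for the error a client raises on HTTP 429."""


def call_with_backoff(request_fn, max_attempts=5, base_delay=1.0,
                      max_delay=32.0, sleep=time.sleep):
    """Call request_fn, retrying on 429 with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return request_fn()
        except TooManyRequestsError:
            # Give up and re-raise once the final attempt has failed.
            if attempt == max_attempts - 1:
                raise
            # The delay ceiling doubles on each retry, capped at max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # "Full jitter": wait a random time up to the ceiling so that
            # many clients do not all retry at the same moment.
            sleep(random.uniform(0, ceiling))
```

The `sleep` parameter exists only so the wait can be replaced in tests. In an application you would pass a function that issues the model request as `request_fn` and catch the specific exception your client library raises.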

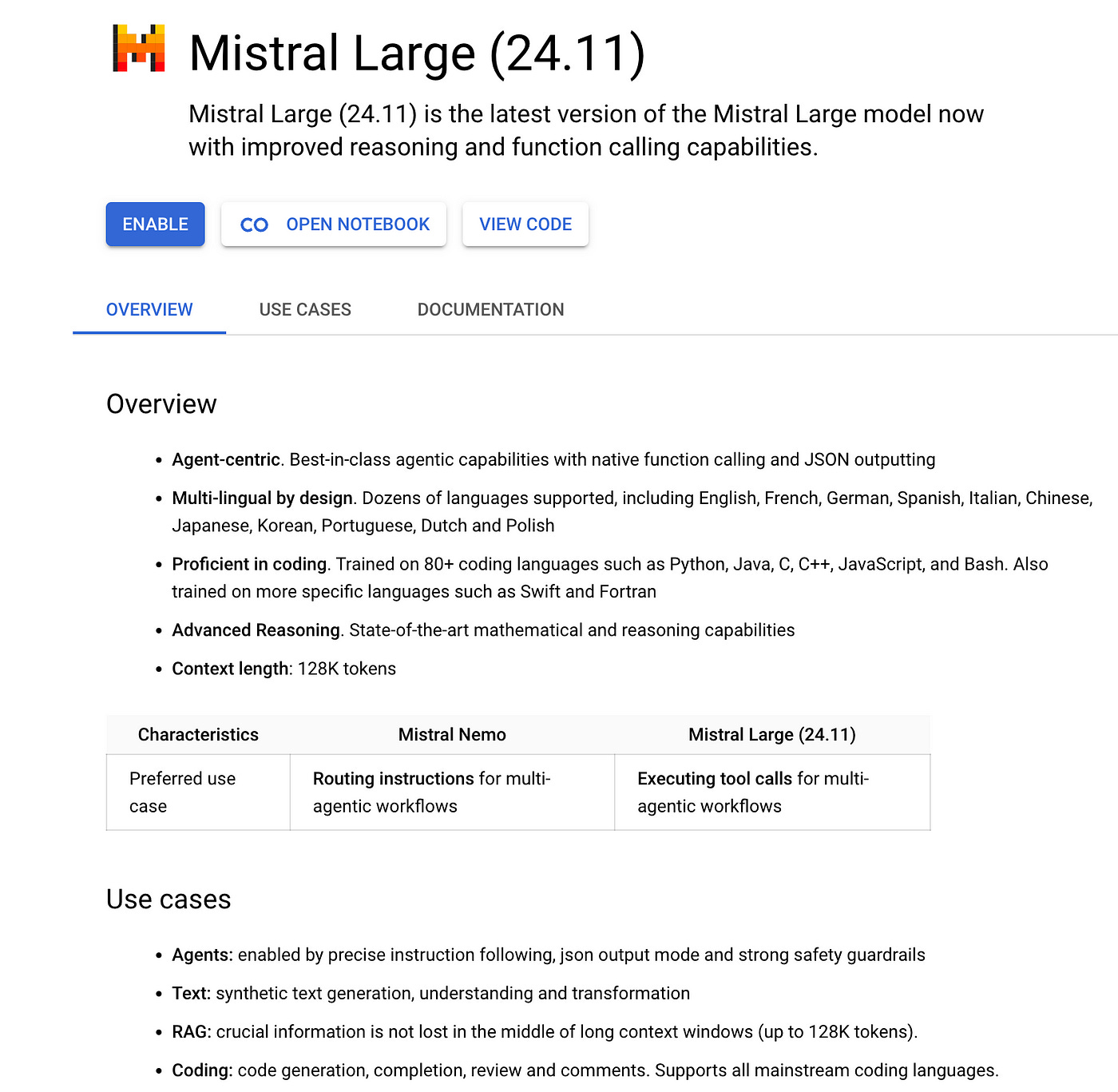

Mistral AI’s newest model, Mistral-Large-Instruct-2411, is now generally available on Vertex AI Model Garden. The blog post describes it as an “advanced dense large language model (LLM) of 123B parameters with strong reasoning, knowledge and coding capabilities extending its predecessor with better long context, function calling and system prompt.” Check out the post to understand the use cases this model is best suited for (complex agentic workflows, code generation and large-context applications) and a quick way to start evaluating and using it via Model Garden.

Infrastructure

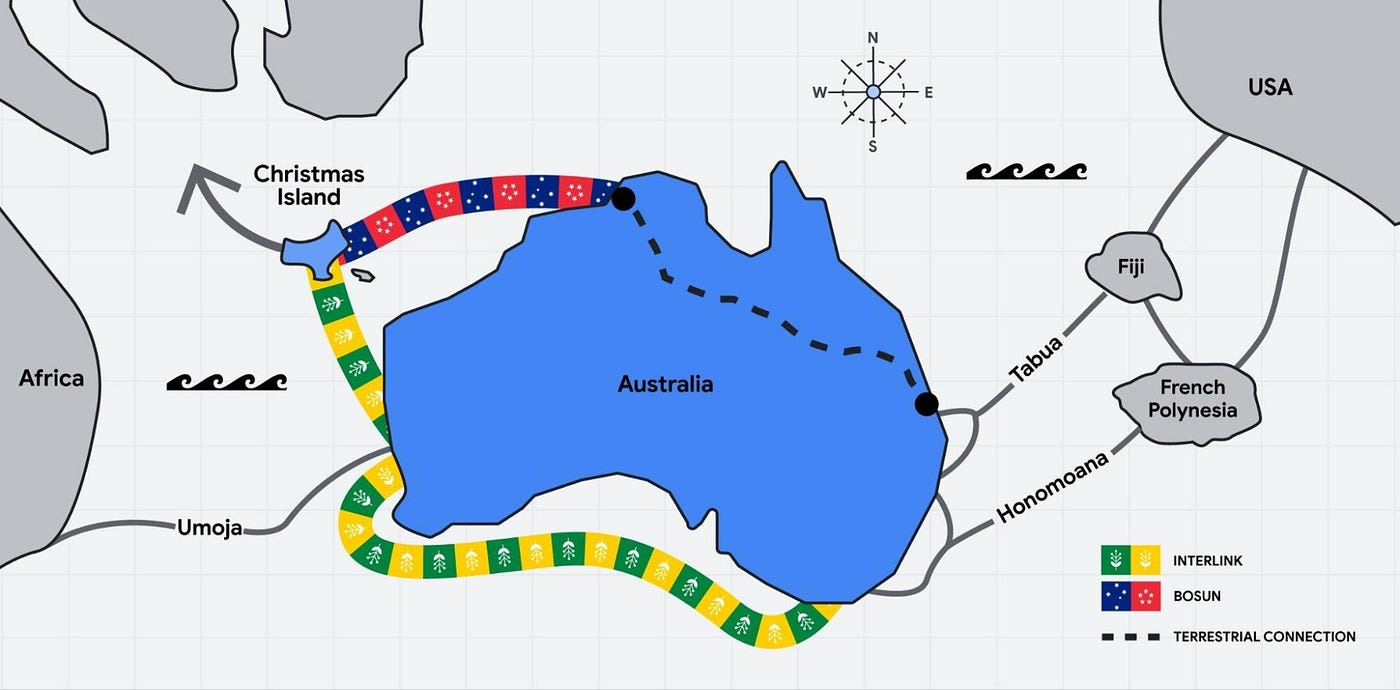

Last year, we mentioned the South Pacific Connect initiative, which will create a ring between Australia, Fiji and French Polynesia. It continues to see momentum with the announcement of the Australia Connect initiative, and specifically the Bosun subsea cable, which will connect Darwin, Australia to Christmas Island, which in turn has onward connectivity to Singapore. These infrastructure investments are sure to bring all-round connectivity and directly improve the digital infrastructure with more services. Check out the blog post for more details.

High Performance Computing

If you have not been following Google Cloud’s developments in High Performance Computing (HPC) services, now is the time to catch up. A blog post titled “What’s new with HPC and AI infrastructure at Google Cloud” covers several of the available infrastructure offerings, such as the H-Series VMs, Parallelstore (a fully managed, scalable, high-performance storage solution based on next-generation DAOS technology), Trillium TPUs and more.

They have also announced the Google Cloud Advanced Computing Community, a new kind of community of practice for sharing and growing HPC, AI, and quantum computing expertise, innovation, and impact. Sign up here if this is of interest.

Databases

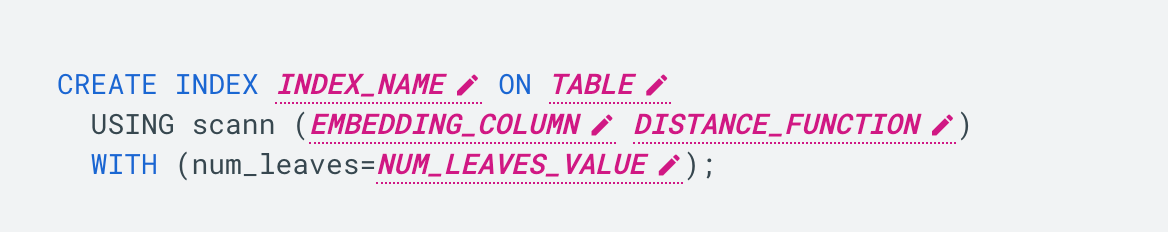

AlloyDB Omni has a new release (15.7.0) that brings significant performance improvements, an ultra-fast disk cache, general availability of the ScaNN index and more. Check out the blog post for more details.

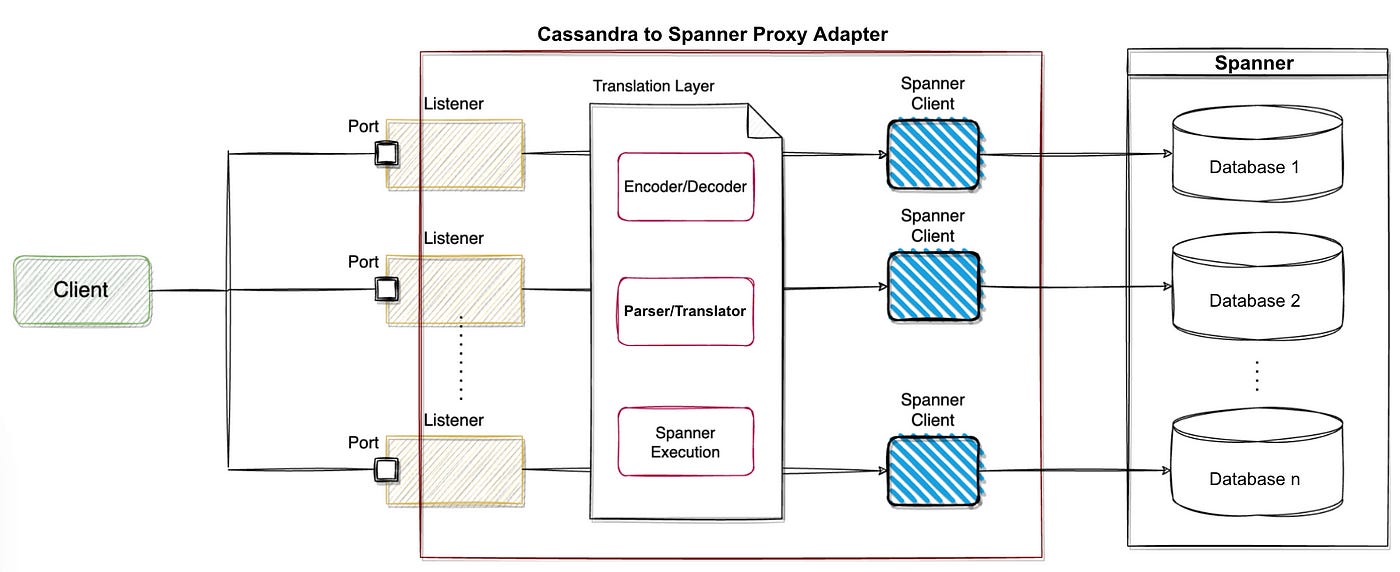

Looking to migrate from Cassandra to Spanner? Google Cloud has announced a Cassandra to Spanner Proxy Adapter, an open-source tool for plug-and-play migrations of Cassandra workloads to Spanner, without any changes to the application logic. Check out this blog post that highlights key features of Spanner, Yahoo’s migration journey from Cassandra to Spanner and what’s under the hood of this adapter.

Data Analytics

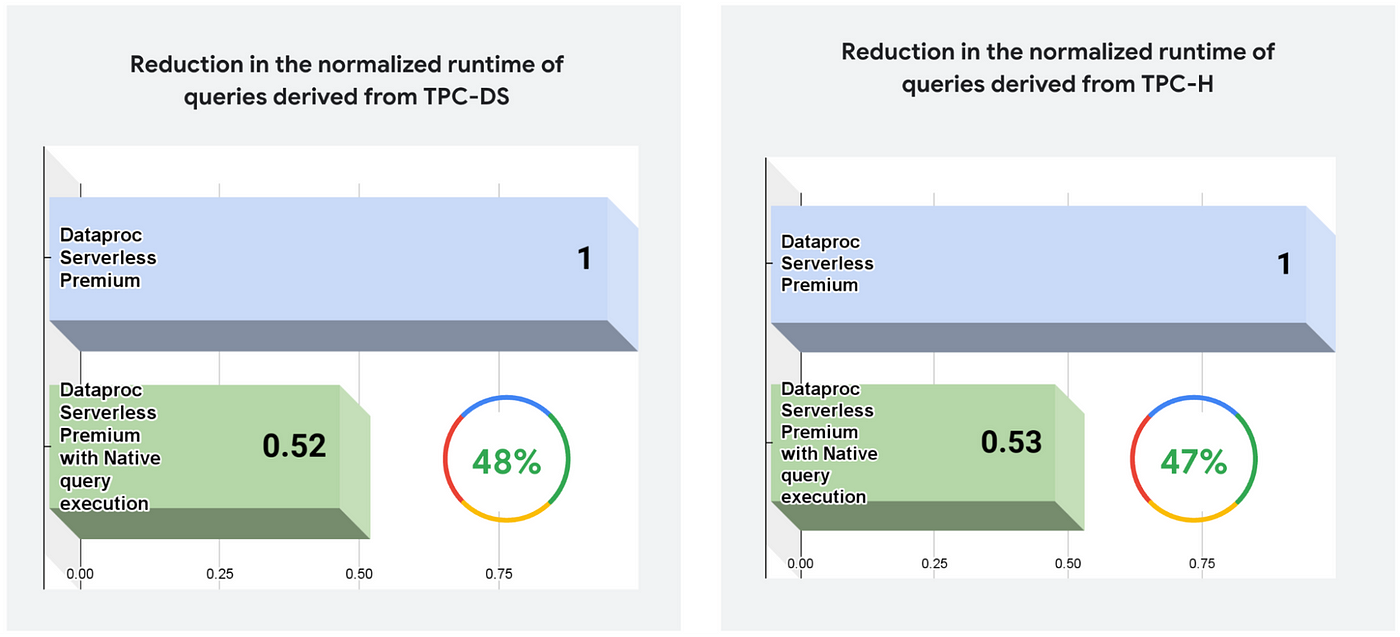

Dataproc Serverless allows you to run Spark batch workloads without provisioning and managing your own cluster. It now packs a punch with several new features geared towards making it faster, easier and more intelligent. The faster part is the new native query execution, available in the Premium tier, which speeds up your Spark batch jobs. The easier part is a fully managed Spark UI that is available for every batch job and session in both the Standard and Premium tiers of Dataproc Serverless at no additional cost. You submit your job and, with the Spark UI, analyze its performance in real time. The intelligent part is the integration with Gemini, which can automatically fine-tune the Spark configurations of your Dataproc Serverless batch jobs for optimal performance and reliability, and help you troubleshoot slow or failed jobs. Check out the blog post for more details.

Customer Stories

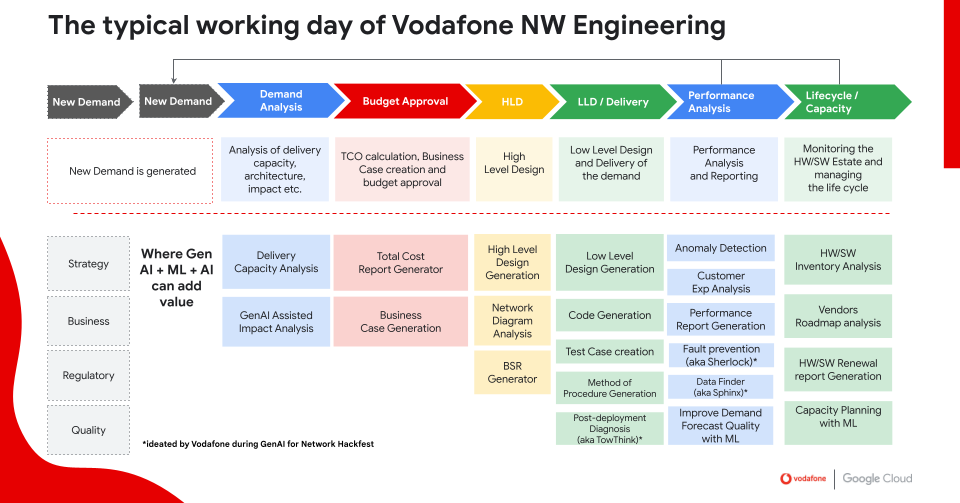

Vodafone needs no introduction and, in the same way, neither does Generative AI. How does a company like Vodafone plan to use Generative AI? Has it identified specific use cases that are good candidates for exploring it? Check out this blog post to understand the development of 13 demo use cases powered by a mix of Vertex AI Search & Conversation, Gemini 1.5 Pro, a code generation model, and traditional ML algorithms. These use cases included performing site assessments, searching for relevant data, diagnosing network issues, predicting potential outages, and assisting with configuration tasks.

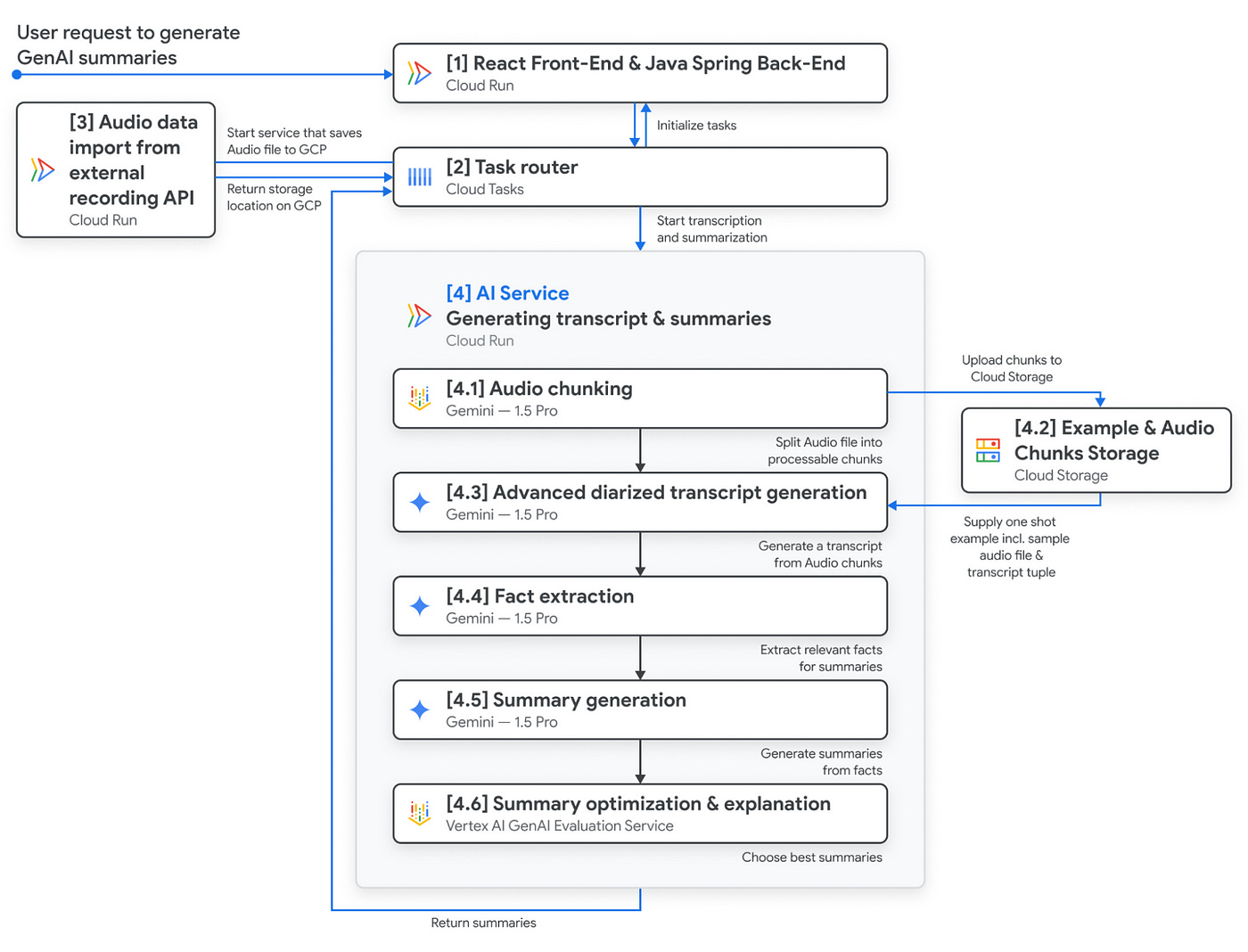

Let’s switch now to Commerzbank’s financial advisory division for corporate clients. The advisory process usually involves not just understanding specific client needs but also sifting through documents, recordings and reports, all with regulatory compliance in the background. The solution is a Gen AI system built on top of Vertex AI that handles multiple modalities. Check out the blog post for more details.

Developers and Practitioners

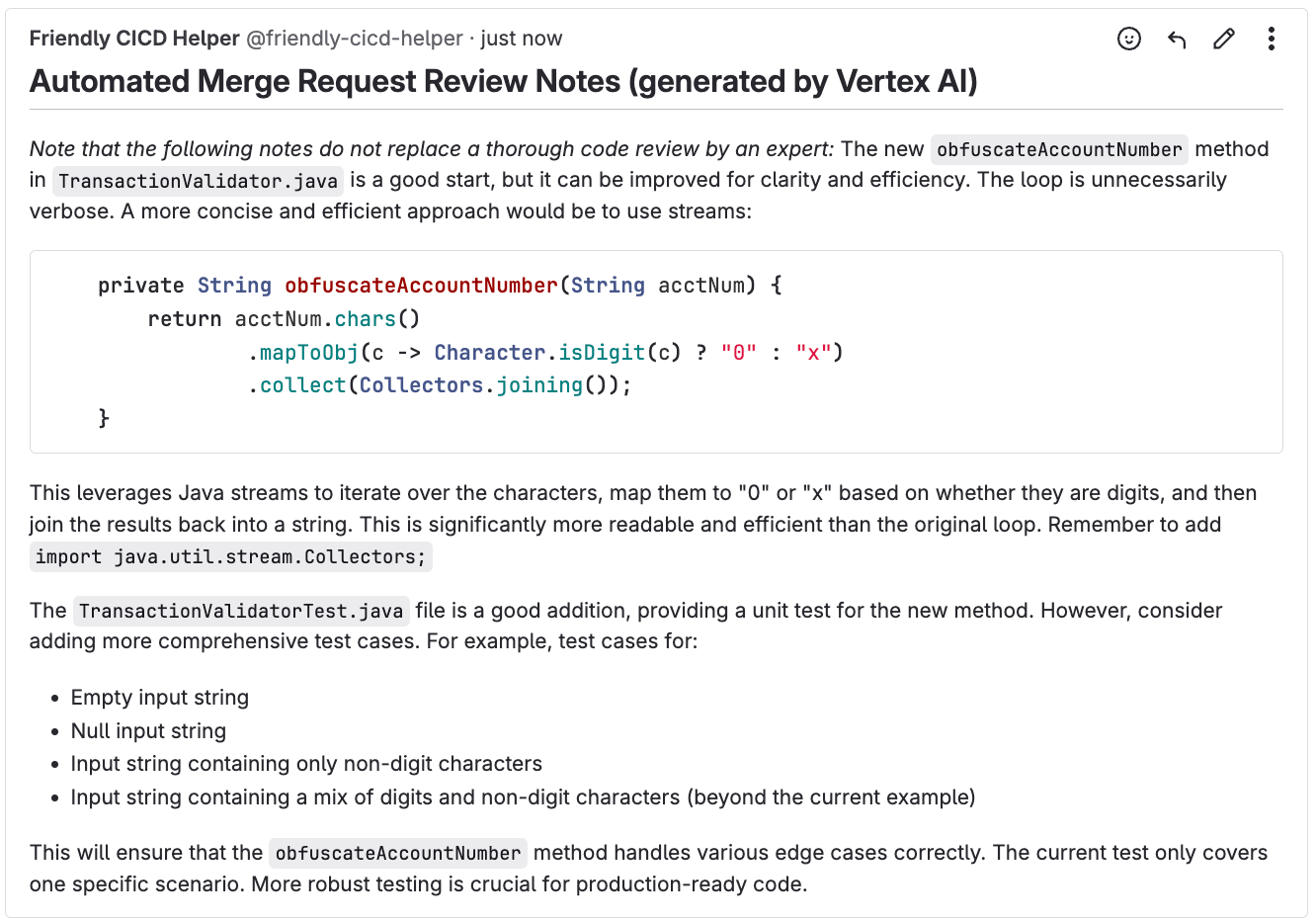

Coding Assistants in IDEs have been one of the top use cases of Generative AI, and organizations have seen significant productivity gains from them. But writing code is just one part of the Software Development Lifecycle (SDLC), which also includes tasks like pull requests, code reviews and generating release notes. How can Gemini be leveraged to help with these tasks and integrate well with Google Cloud services like Cloud Build? Check out the blog post to learn more.

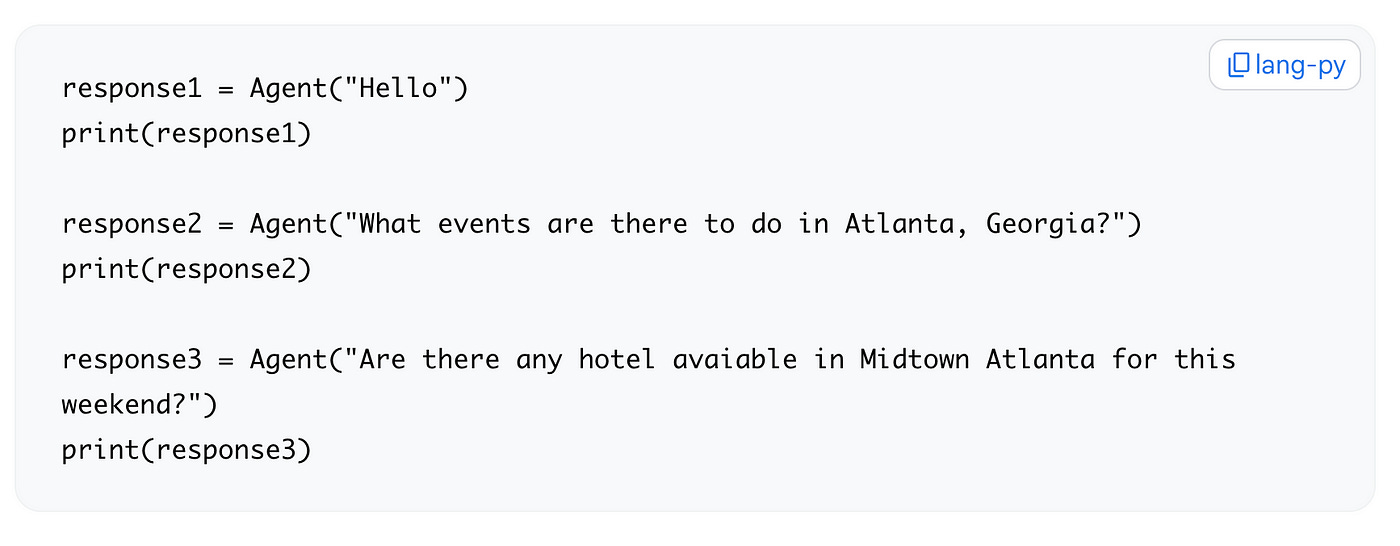

We’ve seen demos of Trip Planning Agents. But how do you build one? How do you create an Agent that can connect to external data, access and integrate real-time information and generate personalized recommendations? In Generative AI terminology, that means making use of Function Calling (Tools) and Grounding your responses in real-world data. Check out this blog post that covers both these concepts and builds out an agent via a Notebook that you can run yourself.
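
The application side of Function Calling can be illustrated with a small, SDK-independent sketch. It does not use the Vertex AI SDK or the code from the blog post's Notebook: it assumes the model has already returned a structured request naming a tool and its arguments, and shows how an application might route that request to local code. `get_weather`, `TOOLS` and the shape of the `call` dictionary are all hypothetical.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool; a real one would query a live weather service."""
    return {"city": city, "forecast": "sunny"}


# Registry mapping tool names the model may request to local functions.
TOOLS = {"get_weather": get_weather}


def dispatch_function_call(call: dict) -> str:
    """Run the tool named in a model's function-call request.

    `call` is assumed to look like {"name": ..., "args": {...}}. The JSON
    result would be sent back to the model so that its final answer is
    based on the tool's output.
    """
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    result = TOOLS[name](**call.get("args", {}))
    return json.dumps(result)


reply = dispatch_function_call({"name": "get_weather", "args": {"city": "Paris"}})
```

Keeping tools in an explicit registry means the model can only trigger functions you have deliberately exposed.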

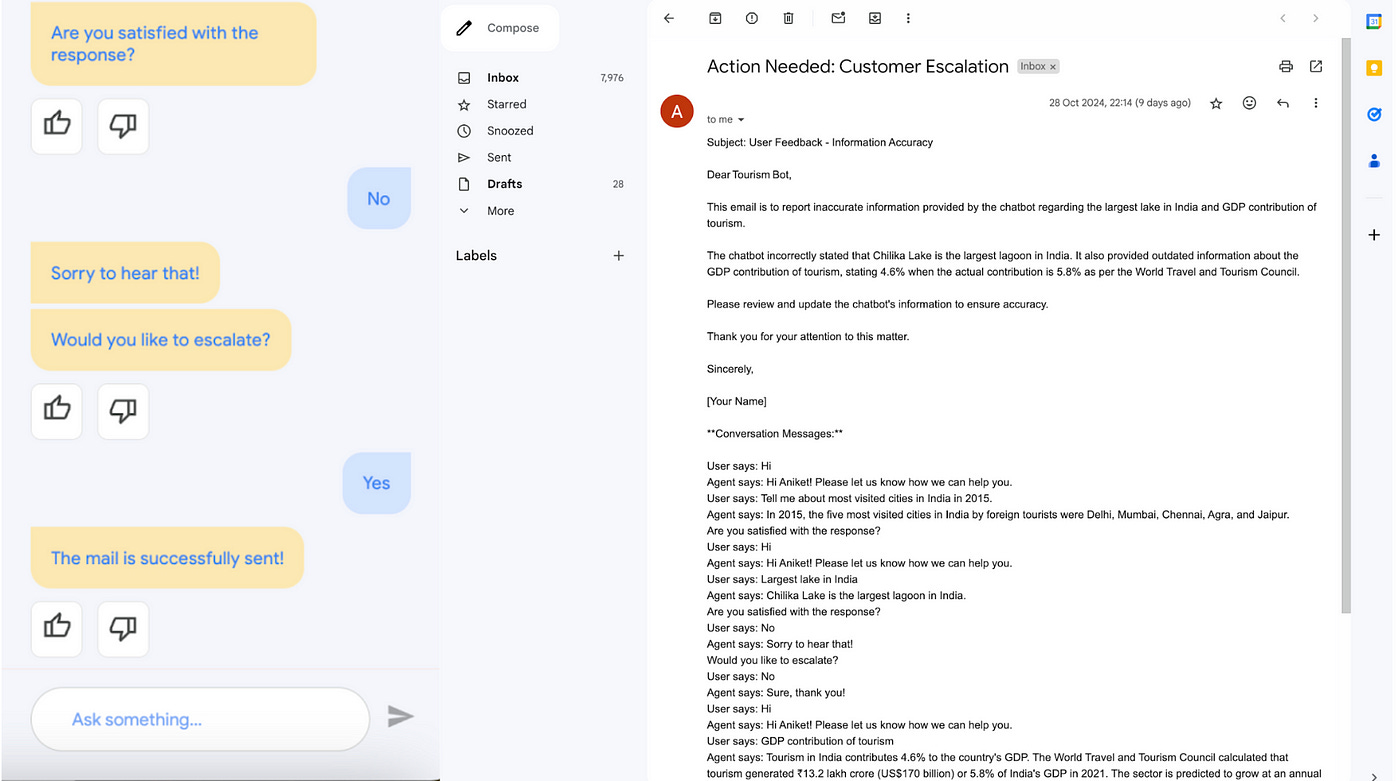

We have another Agent development guide, which uses Vertex AI Agent Builder to build an Agent that asks the user for feedback. If the user is not satisfied with the process, the conversation is marked for escalation to the manager or the next level and an email is sent. Check out how tools like Vertex AI Agent Builder, Cloud Functions and others come together in the guide titled “Create a self-escalating chatbot in Conversational Agents using Webhook and Generators”.

Stay in Touch

Have questions, comments, or other feedback on this newsletter? Please send Feedback.

If any of your peers are interested in receiving this newsletter, send them the Subscribe link.