Google Cloud Platform Technology Nuggets - January 16-31, 2025 Edition

Welcome to the January 16–31, 2025 edition of Google Cloud Platform Technology Nuggets. The nuggets are also available as a podcast.

Infrastructure

This edition of the newsletter is heavy on Compute updates. Here we go.

C4A is a VM family based on Google Axion Processors. C4A VMs with Titanium SSDs, custom designed by Google for cloud workloads that require real-time data processing with low-latency, high-throughput storage performance, are now generally available (GA). These VMs are available on demand, as Spot VMs, and through reservations, committed use discounts (CUDs), and FlexCUDs. Check out the blog post for the regions where they are currently available.

AI Hypercomputer, the umbrella term for AI-optimized hardware, software, and consumption models combined to improve productivity and efficiency, saw significant updates in the last quarter of 2024. This blog post summarizes those updates, with a focus on which workloads benefit most from them. The one that stands out is the ability to try Cloud TPUs (TPU v5e) in Colab.

The A3 VM series is optimized for compute- and memory-intensive, network-bound ML training and HPC workloads. But an 8-GPU VM is not cost-effective for every organization, since workloads vary. To cater to different demands and add flexibility, think 1, 2, 4 and 8 GPUs with A3 VMs. In summary, A3 High VMs powered by NVIDIA H100 80GB GPUs are now generally available in multiple machine types with 1, 2, 4 and 8 GPUs. Check out the blog post and the table below for a quick summary. Note that these new VMs are available through fully managed Vertex AI, as nodes in Google Kubernetes Engine (GKE), as VMs in Google Compute Engine, as Spot VMs, and through Dynamic Workload Scheduler (DWS) Flex Start mode.

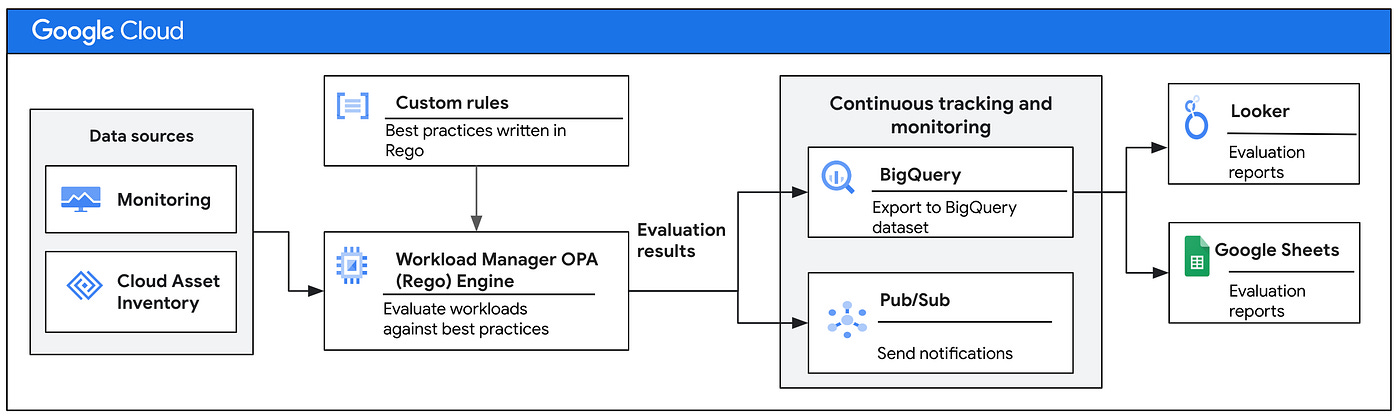
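With four sizes to choose from, right-sizing becomes a simple lookup. The sketch below is a hypothetical helper, not a Google Cloud API: it picks the smallest A3 High machine type that covers a requested GPU count. The `a3-highgpu-<N>g` names are an assumption to confirm against the Compute Engine documentation for your region.

```python
# Hypothetical helper, not a Google Cloud API: choose the smallest A3 High
# machine type that provides at least the requested number of H100 GPUs.
# Machine type names assume the a3-highgpu-<N>g pattern; check the Compute
# Engine documentation for the names and regions that apply to you.

A3_HIGH_GPU_COUNTS = (1, 2, 4, 8)  # GPU counts per A3 High VM, per this edition


def smallest_a3_high_machine_type(gpus_needed: int) -> str:
    """Return the smallest A3 High machine type with >= gpus_needed GPUs."""
    if gpus_needed < 1:
        raise ValueError("gpus_needed must be at least 1")
    for count in A3_HIGH_GPU_COUNTS:
        if count >= gpus_needed:
            return f"a3-highgpu-{count}g"
    raise ValueError(
        f"{gpus_needed} GPUs exceeds one A3 High VM (max {A3_HIGH_GPU_COUNTS[-1]})"
    )


if __name__ == "__main__":
    for n in (1, 3, 8):
        print(n, "GPU(s) ->", smallest_a3_high_machine_type(n))
```

A job that needs 3 GPUs now lands on a 4-GPU shape instead of paying for a full 8-GPU VM.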

Workload Manager is a rule-based validation service for evaluating workloads running on Google Cloud. It scans your workloads to detect deviations from standards, rules, and best practices, helping improve system quality, reliability, and performance. The service now supports Custom Rules, which help validate your workloads against your organization’s best practices.

Some examples of custom rules are “Do not use the Compute Engine default service account”, “VMs must have at least one label”, and “VMs in your workload don’t use an external IP address”. Workload Manager tracks and monitors your workloads against these custom rules, detects drift and exceptions, and provides reports for you to review. Check out the blog post for more details.
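To make rule-based drift detection concrete, here is a minimal Python sketch that checks the three example rules against a hypothetical in-memory VM inventory. It is not the Workload Manager rule format or API; the `VM` record, the rule names, and `find_violations` are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Suffix of the Compute Engine default service account:
# PROJECT_NUMBER-compute@developer.gserviceaccount.com
DEFAULT_SA_SUFFIX = "-compute@developer.gserviceaccount.com"


@dataclass
class VM:
    """Hypothetical, simplified inventory record for one VM."""
    name: str
    service_account: str
    labels: Dict[str, str] = field(default_factory=dict)
    external_ip: Optional[str] = None


# Each rule is (rule name, predicate that returns True when the VM complies).
Rule = Tuple[str, Callable[[VM], bool]]

RULES: List[Rule] = [
    ("no-default-service-account",
     lambda vm: not vm.service_account.endswith(DEFAULT_SA_SUFFIX)),
    ("at-least-one-label", lambda vm: len(vm.labels) > 0),
    ("no-external-ip", lambda vm: vm.external_ip is None),
]


def find_violations(vms: List[VM], rules: List[Rule] = RULES) -> List[Tuple[str, str]]:
    """Return (vm name, rule name) for every rule a VM fails."""
    return [(vm.name, rule_name)
            for vm in vms
            for rule_name, complies in rules
            if not complies(vm)]


if __name__ == "__main__":
    inventory = [
        VM("web-1", "123456789-compute@developer.gserviceaccount.com",
           external_ip="203.0.113.10"),
        VM("db-1", "db-sa@my-project.iam.gserviceaccount.com",
           labels={"env": "prod"}),
    ]
    for vm_name, rule_name in find_violations(inventory):
        print(f"{vm_name}: violates {rule_name}")
```

Keeping each rule as a small named predicate mirrors the idea behind custom rules: the checks encode your organization’s standards, and the report is simply the list of failures.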

Machine Learning

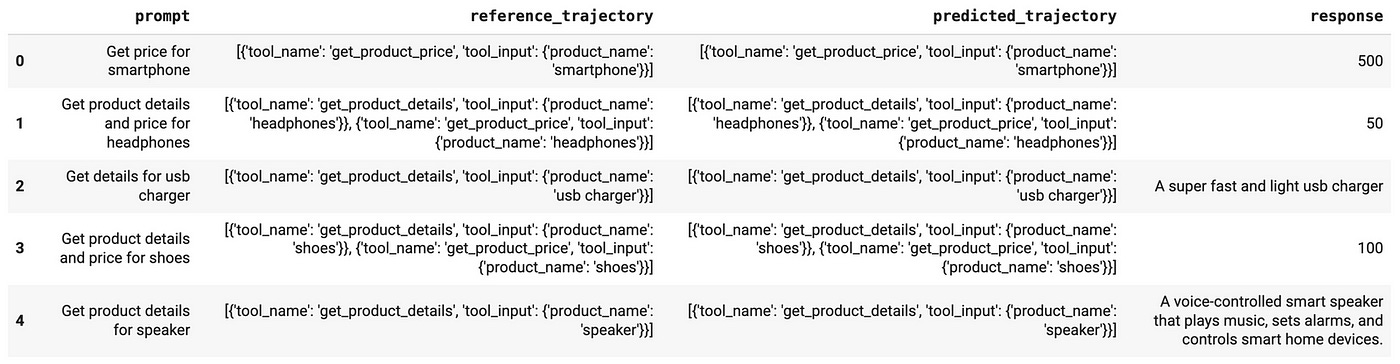

The Vertex AI Gen AI evaluation service is now in public preview. It helps you evaluate any generative model or application and benchmark the evaluation results against your own judgment, using your own evaluation criteria. When evaluating an agent with this service, you look at both the responses it generates and the sequence of actions (the trajectory) it takes to reach those responses. The blog post includes a sample evaluation dataset.

You then define the metrics you want to use to evaluate the agent. Check out the blog post for details on the service, how to get started, and sample notebooks.
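Trajectory evaluation compares the actions an agent actually took with a reference list of expected actions. The functions below are local stand-ins written for illustration, not the service’s built-in metric implementations. They assume each action is reduced to just a tool name, and the evaluation row is made up.

```python
from typing import Sequence

# Hypothetical simplification: each action in a trajectory is just a tool name.


def exact_match(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """1.0 if the agent took exactly the reference actions, in order."""
    return 1.0 if list(predicted) == list(reference) else 0.0


def in_order_match(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """1.0 if the reference actions appear in order; extra actions are allowed."""
    remaining = iter(predicted)
    # `action in remaining` advances the iterator, so order is enforced.
    return 1.0 if all(action in remaining for action in reference) else 0.0


def precision(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """Share of predicted actions that also appear in the reference."""
    if not predicted:
        return 0.0
    return sum(action in reference for action in predicted) / len(predicted)


def recall(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """Share of reference actions the agent actually took (0.0 if none expected)."""
    if not reference:
        return 0.0
    return sum(action in predicted for action in reference) / len(reference)


if __name__ == "__main__":
    # One hypothetical evaluation row.
    row = {
        "prompt": "Book a table for two tonight",
        "reference_trajectory": ["search_restaurants", "check_availability",
                                 "make_reservation"],
        "predicted_trajectory": ["search_restaurants", "get_reviews",
                                 "check_availability", "make_reservation"],
    }
    pred, ref = row["predicted_trajectory"], row["reference_trajectory"]
    for metric in (exact_match, in_order_match, precision, recall):
        print(f"{metric.__name__}: {metric(pred, ref):.2f}")
```

Here the agent took one extra step, so the trajectory fails an exact match but still passes an in-order match with full recall and lower precision. That is why it helps to pick several complementary metrics.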

Identity and Security

We have two CISO bulletins this month. The first one explains that when we talk about cybersecurity in an organization, we should relate it to the business impact that breaches and attacks can have on the organization. Key among those impacts are financial losses, reputational damage, legal and regulatory fallout, and operational disruption. This framing makes a lot of sense. The second CISO bulletin covers the state of ransomware in the cloud. The material is drawn from the latest edition of the Google Cloud Threat Horizons Report, which provides decision-makers with strategic intelligence on threats not just to Google Cloud, but to all providers.

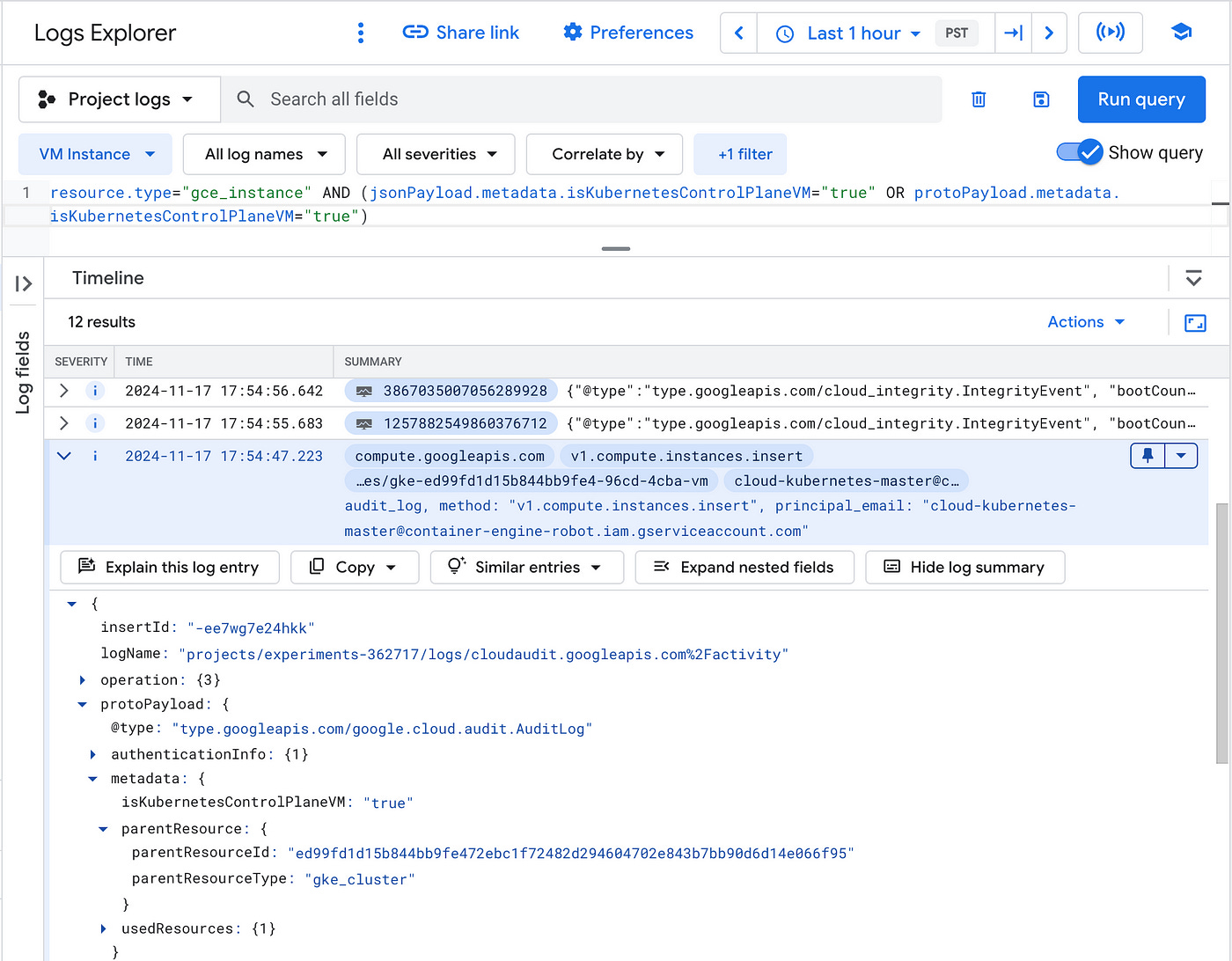

What is a software supply chain? As the definition goes, “A software supply chain is the process and components used to develop, build, and publish software. It includes the code, tools, libraries, and configurations used to create a software artifact.” In short, knowing the origin of the software components you deploy is crucial for mitigating risks and ensuring the trustworthiness of your applications. You can now verify the integrity of Google Kubernetes Engine components with SLSA, the Supply-chain Levels for Software Artifacts framework. Read more about it in the detailed blog post, which highlights:

Google Compute Engine (GCE) audit logs now include VM image identifiers.

New GKE clusters running version 1.29 or later now forward their control plane GCE audit log records for insert, bulk insert, and update operations, along with their Shielded VM integrity logs, to the customer project hosting the GKE cluster.

GKE is publishing SLSA Verification Summary Attestations (VSAs) for its Container-Optimized OS (COS) based VM images.

You can verify the authenticity of GKE VM images using the open-source slsa-verifier tool.
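The essential step, checking the attestation’s signature, is what slsa-verifier handles. To show what a VSA actually claims, here is a minimal Python sketch that inspects an already decoded and already signature-verified VSA statement. The field names follow the SLSA Verification Summary Attestation v1 format. The verifier ID, image name, digest, and required level are placeholders, not the values Google publishes. Use this only to understand the claims, never as a substitute for signature verification.

```python
import json
from typing import List

VSA_PREDICATE_TYPE = "https://slsa.dev/verification_summary/v1"


def check_vsa_claims(statement: dict, image_sha256: str,
                     trusted_verifier_id: str, required_level: str) -> List[str]:
    """Return a list of problems found in a decoded VSA statement (empty = OK).

    Assumes the statement's signature was ALREADY verified (for example by
    slsa-verifier); this function only inspects the claims inside it.
    """
    problems = []
    if statement.get("predicateType") != VSA_PREDICATE_TYPE:
        problems.append("unexpected predicateType")
    subject_digests = {s.get("digest", {}).get("sha256")
                       for s in statement.get("subject", [])}
    if image_sha256 not in subject_digests:
        problems.append("image digest is not a VSA subject")
    predicate = statement.get("predicate", {})
    if predicate.get("verifier", {}).get("id") != trusted_verifier_id:
        problems.append("VSA was issued by an untrusted verifier")
    if predicate.get("verificationResult") != "PASSED":
        problems.append("verificationResult is not PASSED")
    if required_level not in predicate.get("verifiedLevels", []):
        problems.append(f"{required_level} is not among verifiedLevels")
    return problems


if __name__ == "__main__":
    digest = "a" * 64  # placeholder image digest
    raw = json.dumps({
        "_type": "https://in-toto.io/Statement/v1",
        "subject": [{"name": "hypothetical-cos-image",
                     "digest": {"sha256": digest}}],
        "predicateType": VSA_PREDICATE_TYPE,
        "predicate": {
            "verifier": {"id": "https://verifier.example/hypothetical"},
            "verificationResult": "PASSED",
            "verifiedLevels": ["SLSA_BUILD_LEVEL_3"],
        },
    })
    print(check_vsa_claims(json.loads(raw), digest,
                           "https://verifier.example/hypothetical",
                           "SLSA_BUILD_LEVEL_3"))
```

Binding the check to the exact image digest matters: a valid VSA for a different image proves nothing about the one you are about to run.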

A key focus of Confidential Computing is securing data in use, particularly sensitive data. A few Confidential Computing updates in Google Cloud that help with this include:

Confidential GKE Nodes enforce encryption of data in use in your Google Kubernetes Engine (GKE) nodes and workloads. This feature now extends to the C3D machine series with AMD SEV in GKE Standard mode, in addition to the already supported machine series based on 2nd and 3rd Gen AMD EPYC™ processors: the general-purpose N2D machine series and the compute-optimized C2D machine series.

Confidential GKE Nodes on GKE Autopilot mode is now generally available.

Check out the blog post for more details and additional features.

Customer Stories

What does an end-to-end MLOps platform look like on Google Cloud? L’Oréal, the world’s largest cosmetics company, led the effort to build one through its Tech Accelerator program. Everything from labeled data preparation to training, prediction, and deployment pipelines was mapped out and built. Check out the blog post for more details on each of these building blocks.

Containers and Kubernetes

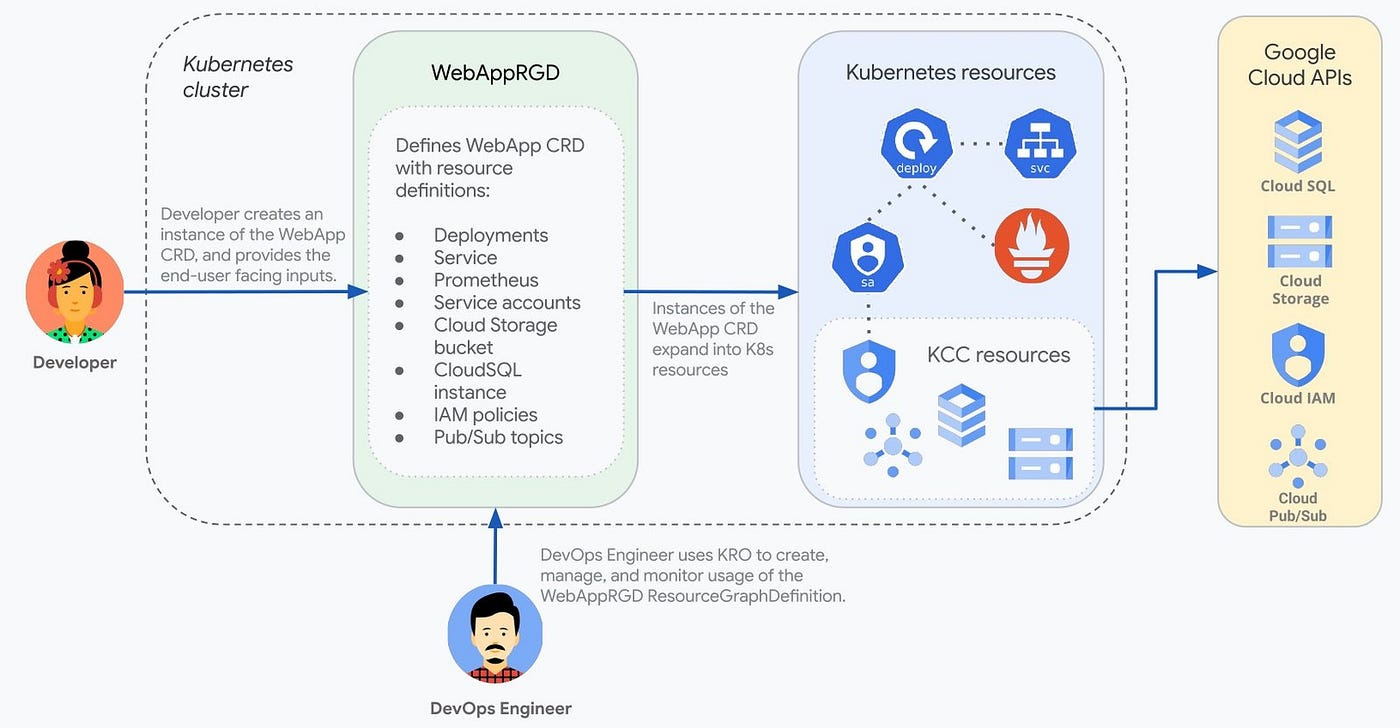

Open source can often bring together companies you never thought would work together. Google Cloud, AWS, and Azure have come together to release Kube Resource Orchestrator, or kro (pronounced “crow”). As the blog post states, “this is a cloud-agnostic way to define groupings of Kubernetes resources. With kro, you can group your applications and their dependencies as a single resource that can be easily consumed by end users.” You can encapsulate a Kubernetes deployment and its dependencies into a single API. The example below shows a WebApp Resource Graph Definition (WebAppRGD) that defines all the resources a web application environment needs, including Deployments, Services, service accounts, monitoring agents, and cloud resources such as object storage buckets. A developer can then create an instance of the WebApp CRD, supplying any user-facing parameters, and kro deploys the desired Kubernetes resources.

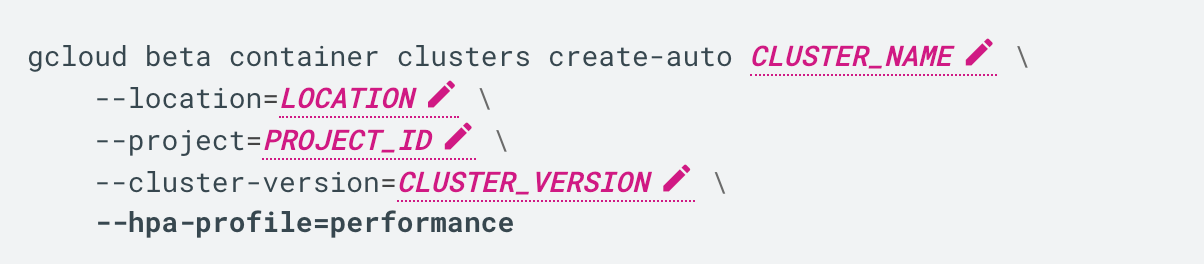
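To illustrate the core idea of one small user-facing spec fanning out into several Kubernetes objects, here is a conceptual Python sketch. This is not how kro works internally: kro uses a ResourceGraphDefinition and a controller. The `WebApp` fields (`name`, `image`, `replicas`, `port`), the `example.com/v1alpha1` API version, and `expand_webapp` are all hypothetical. For brevity it omits the monitoring agents and cloud resources such as buckets.

```python
import json
from typing import Any, Dict, List


def expand_webapp(instance: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Expand one hypothetical WebApp instance into plain Kubernetes manifests."""
    spec = instance["spec"]
    name = spec["name"]
    image = spec.get("image", "nginx")  # defaults are illustrative only
    replicas = spec.get("replicas", 1)
    port = spec.get("port", 80)
    labels = {"app": name}

    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name},
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "serviceAccountName": name,
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": labels,
                 "ports": [{"port": 80, "targetPort": port}]},
    }
    return [service_account, deployment, service]


if __name__ == "__main__":
    webapp = {"apiVersion": "example.com/v1alpha1", "kind": "WebApp",
              "metadata": {"name": "shop"},
              "spec": {"name": "shop", "image": "nginx:1.27", "replicas": 2}}
    print(json.dumps(expand_webapp(webapp), indent=2))
```

The developer supplies four fields. The wiring between them (labels, selectors, and the service account name) is defined once by whoever owns the definition, which is the encapsulation kro aims to provide.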

The Horizontal Pod Autoscaler (HPA) in GKE has been completely re-architected, providing higher scale, a fast metrics path, and consistent, predictable scaling for up to 1,000 HPA objects. An HPA object is a configuration within Kubernetes that defines how a specific Deployment or ReplicaSet should be scaled by HPA. Each HPA object monitors a particular set of Pods. Check out the blog post for more details.
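The re-architecture changes how GKE collects metrics and runs HPA at scale. The upstream Kubernetes documentation describes the per-object decision as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below implements that formula with the upstream default 10% tolerance and min/max clamping. It omits stabilization windows, scaling policies, and multi-metric handling, and it is not GKE’s implementation.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA calculation: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # 90% CPU vs. 60% target -> 6
    print(desired_replicas(4, 63, 60))  # within 10% tolerance -> 4
    print(desired_replicas(4, 30, 60))  # half the target -> 2
```

Each HPA object repeats this calculation every sync period. Running it reliably for many objects at once is what a faster metrics path helps with.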

Who doesn’t want flexibility and easier configuration? GKE control-plane access has been decoupled from node-pool IP configuration. In simpler terms, you now have a more flexible way to configure and operate connectivity to the GKE control plane and to the nodes.

Each area can therefore be controlled individually, with the configuration and policies best suited to your deployment. Check out this blog post, which highlights the previous challenges and how these changes address them.

Databases

Spanner Graph is now generally available. It provides a unified database that integrates graph, relational, search, and gen AI capabilities, coupled with the scale Spanner is known for. Alongside GA come a new open-source Spanner Graph Notebook tool that provides an efficient way to query Spanner Graph visually, LangChain integration, Spanner Graph performance improvements, and partner integrations. Check out the detailed blog post.

It’s been a while since the Oracle and Google Cloud partnership was announced, enabling the migration of Oracle databases and applications to Google Cloud. But what are the migration paths for the databases as well as the applications? This blog post highlights the migration options for Oracle databases and containerized Oracle applications, ways to boost the performance of various Java-based Oracle applications, and more.

Data Analytics

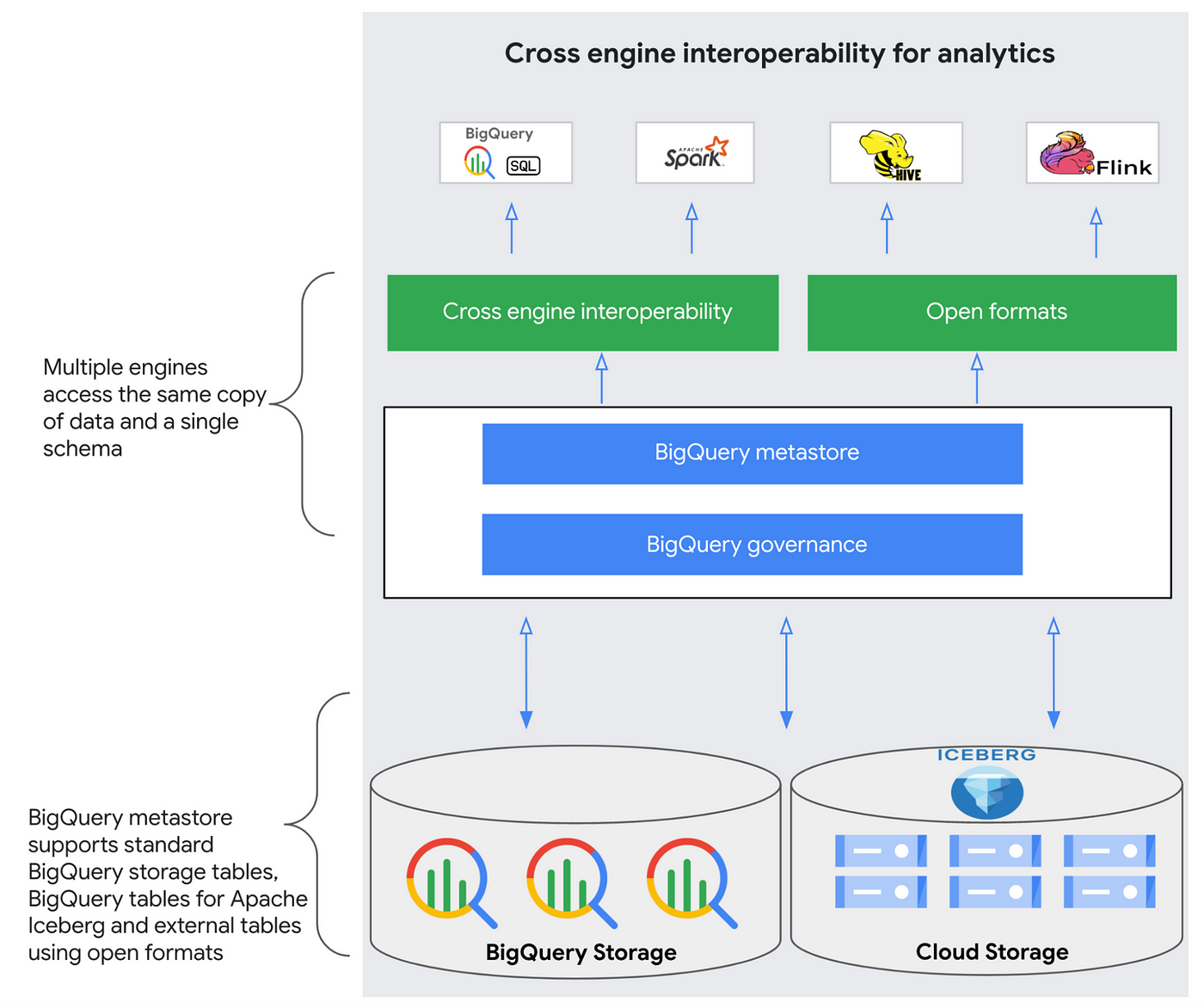

BigQuery metastore, the fully managed metastore for data analytics products on Google Cloud that provides a single source of truth for managing metadata from multiple sources, is now available in public preview. It supports open data formats such as Apache Iceberg that are accessible to a variety of processing engines, including BigQuery, Spark, Flink and Hive. Think of it as a single, unified service whose metadata can be used by many engines. Check out the blog post for more details.

Management Tools

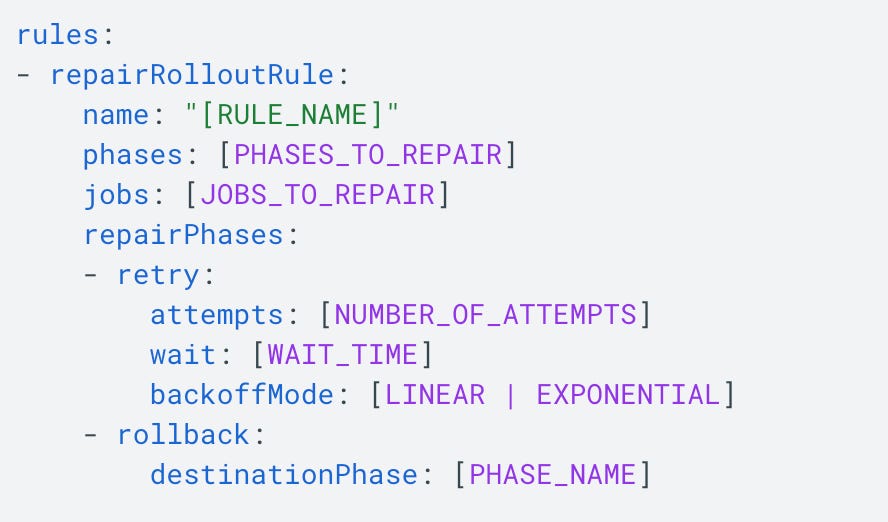

No two organizations roll out deployments safely in quite the same way. Based on constant feedback from customers, Cloud Deploy has introduced three additional features (in preview) for safer deployments:

A new repair rollout automation rule lets you retry failed deployments a specified number of times, or automatically roll back to a previously successful release when an error occurs.

A new policy lets you prevent Cloud Deploy rollouts to a target during a specified time window, with the option to override it when needed. For example, no deployments during a demo to an important customer.

A new timed promotion automation lets you schedule when to promote a release. For example, on Wednesday morning.
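The first two behaviours above, retry-then-rollback and a deployment freeze window with an override, can be modeled in a few lines of plain Python. This is a conceptual sketch, not Cloud Deploy’s configuration or API. In Cloud Deploy these behaviours are configured declaratively rather than coded. The `deploy` callable, the function names, and the release names are hypothetical, and timed promotion is not modeled.

```python
from datetime import datetime, time
from typing import Callable, Optional


def in_blocked_window(now: datetime, start: time, end: time) -> bool:
    """True if `now` falls in [start, end); handles windows that cross midnight."""
    t = now.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def deploy_with_repair(deploy: Callable[[str], bool], release: str,
                       last_good: str, retries: int = 2) -> str:
    """Try `release` (1 + retries attempts), then roll back to `last_good`."""
    for _ in range(1 + retries):
        if deploy(release):
            return release
    if deploy(last_good):
        return last_good
    raise RuntimeError(f"rollback to {last_good} also failed")


def rollout(deploy: Callable[[str], bool], release: str, last_good: str,
            now: datetime, freeze_start: time, freeze_end: time,
            override: bool = False) -> Optional[str]:
    """Return the release that ended up deployed, or None if the freeze blocked it."""
    if in_blocked_window(now, freeze_start, freeze_end) and not override:
        return None
    return deploy_with_repair(deploy, release, last_good)


if __name__ == "__main__":
    demo_start, demo_end = time(14, 0), time(16, 0)  # hypothetical customer demo

    def only_v1_works(rel: str) -> bool:  # simulate v2 always failing to deploy
        return rel == "v1"

    print(rollout(only_v1_works, "v2", "v1",
                  datetime(2025, 1, 29, 15, 0), demo_start, demo_end))
    print(rollout(only_v1_works, "v2", "v1",
                  datetime(2025, 1, 29, 17, 0), demo_start, demo_end))
```

The first call is refused because it falls inside the demo window. The second retries v2, gives up, and rolls back to v1, the last known-good release.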

Check out the blog post.

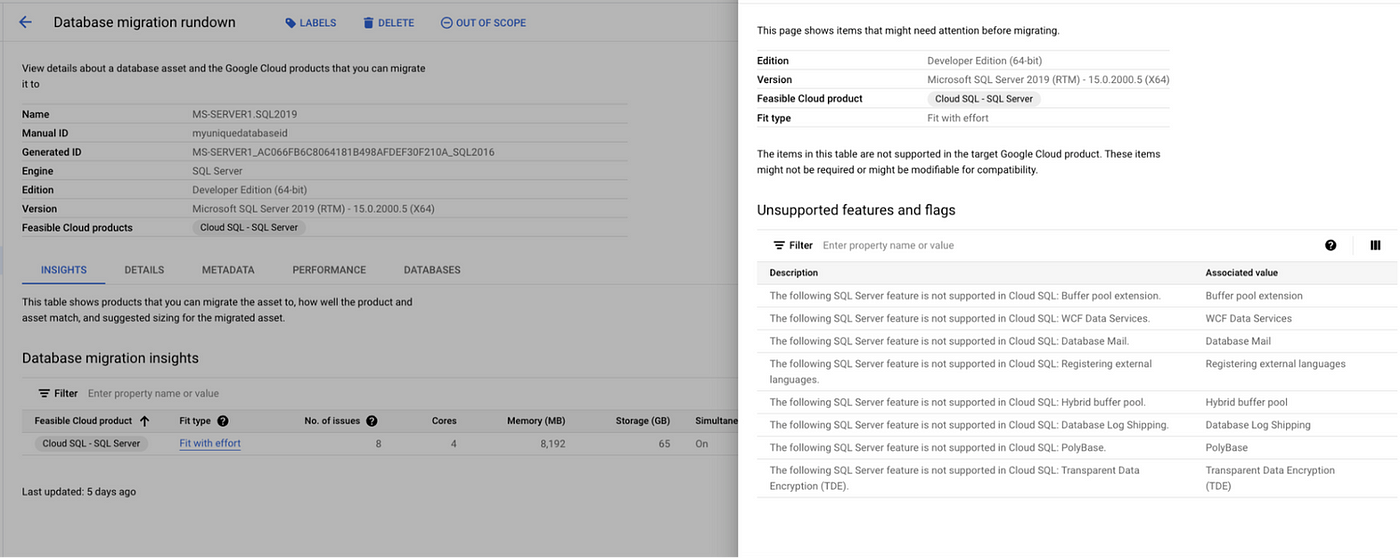

Learn about Migration Center

One of the key services expected from any cloud provider is a set of assessment tools that help organizations decide whether to migrate their applications and databases to the cloud. These tools can provide an initial assessment, cost estimates, migration best practices, migration software, and performance evaluation. Google Cloud provides Google Cloud Migration Center, a unified platform that helps accelerate migration from current on-premises or cloud environments to Google Cloud. This blog post highlights how you can assess databases for migration to Google Cloud. It covers three key areas: discovery, technical fit assessment, and cost estimation.

Write for Google Cloud Medium publication

If you would like to share your Google Cloud expertise with your fellow practitioners, consider becoming an author for the Google Cloud Medium publication. Reach out to me via comments and/or on LinkedIn and I’ll be happy to add you as a writer.

Stay in Touch

Have questions, comments, or other feedback on this newsletter? Please send Feedback.

If any of your peers are interested in receiving this newsletter, send them the Subscribe link.