Google Cloud Platform Technology Nuggets - January 16-31, 2026

Welcome to the January 16–31, 2026 edition of Google Cloud Platform Technology Nuggets. The nuggets are also available on YouTube.

AI and Machine Learning

January saw several announcements in this area. If you’d like to stay in the loop, bookmark the link “What Google Cloud announced in AI this month”. The announcements include Veo 3.1 enhancements, the Universal Commerce Protocol (UCP) release, and more.

Data Analytics

If you want to bookmark just one link to stay informed about everything happening in Google Data Cloud, check out “What’s New with Google Data Cloud”.

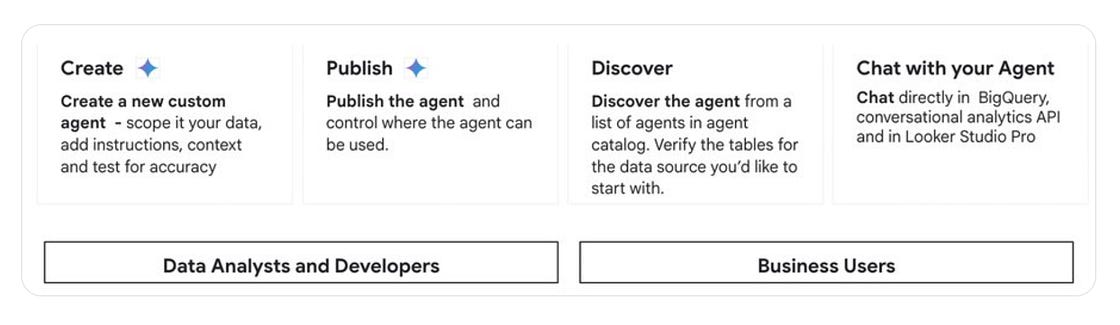

It does seem that we are moving at high speed towards a scenario where SQL might not be required for much of the data analytics work that involves querying your datasets. Enter Conversational Analytics in BigQuery, currently in preview. This feature adds an intelligent agent to BigQuery Studio, where you can ask questions in natural language and get answers grounded in your specific business metadata, with the agent generating the SQL and visualizations for you. Check out the blog post for more details on the feature.

Dataflow, a key component of Google Cloud’s AI stack that lets you create batch and streaming pipelines for a variety of analytics and AI use cases, has received key updates that target choice and efficiency when running those workloads:

More hardware availability: Support for H100 and H100 Mega GPUs and next-gen TPUs (TPU v5e, v5p, and v6e).

Reservations: Users can now reserve GPUs and TPUs specifically for Dataflow jobs to ensure resource availability for critical tasks.

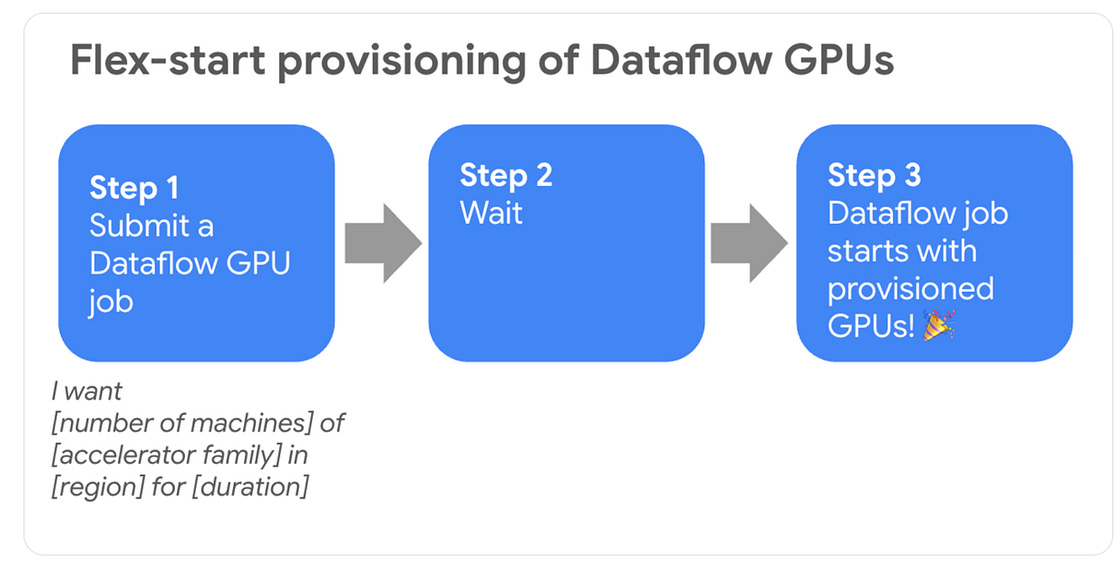

Flex-start Provisioning: Utilizing the Dynamic Workload Scheduler, Dataflow can now queue jobs and automatically start them when GPUs become available, rather than failing the job immediately.

ML-aware Streaming: Support for GPU-based autoscaling, which monitors GPU signals and parallelism to scale streaming jobs more intelligently.

Right Fitting: Users can assign expensive GPUs or high-memory machines to compute-heavy stages while using standard workers for simpler tasks, significantly reducing costs.

Check out the blog post for more details.
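To see why Right Fitting can cut costs, here is a small back-of-the-envelope sketch. It is not Dataflow code: the hourly rates, stage names, and worker-hours below are all hypothetical values picked to keep the arithmetic simple, not real Google Cloud prices. The point is only that paying the GPU rate for every stage costs more than paying it for the one stage that needs it.

```python
# Hypothetical hourly rates, chosen only to make the arithmetic easy to follow.
# They are NOT real Google Cloud prices.
GPU_WORKER_RATE = 4.00       # per worker-hour, GPU-attached worker (assumed)
STANDARD_WORKER_RATE = 0.50  # per worker-hour, standard worker (assumed)


def pipeline_cost(stages, right_fit):
    """Return the total cost of one pipeline run.

    stages: list of (name, worker_hours, needs_gpu) tuples.
    right_fit: if False, every stage runs on GPU workers; if True,
    only the stages that need a GPU do.
    """
    total = 0.0
    for _name, worker_hours, needs_gpu in stages:
        use_gpu = needs_gpu or not right_fit
        rate = GPU_WORKER_RATE if use_gpu else STANDARD_WORKER_RATE
        total += worker_hours * rate
    return total


# Hypothetical pipeline: only the inference stage benefits from a GPU.
STAGES = [
    ("read_and_parse", 10, False),
    ("model_inference", 4, True),
    ("write_results", 6, False),
]

uniform = pipeline_cost(STAGES, right_fit=False)  # all stages on GPU workers
fitted = pipeline_cost(STAGES, right_fit=True)    # GPU only where needed

print(f"all-GPU workers: {uniform:.2f}")
print(f"right-fitted:    {fitted:.2f}")
```

With these made-up numbers the saving comes entirely from the two lightweight stages, which is the situation Right Fitting is meant for. Actual savings depend on real prices and on how much of the pipeline is genuinely compute-heavy.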

BigQuery has doubled down on Gemini 3 and Vertex AI integrations into its product, thereby providing a wide range of functionality now for those working with BigQuery AI. This includes:

Gemini 3.0 Pro/Flash support

Simplified permission setup using End User Credentials (EUC)

AI.generate() function for both text and structured data generation

AI.embed() function for embedding generation

AI.similarity() for computing semantic similarity scores between text and images

Check out the blog post.
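For readers new to semantic similarity, the sketch below shows the general idea behind scoring two embeddings. It assumes the score behaves like cosine similarity between embedding vectors, which is a common choice; the newsletter does not say which metric BigQuery uses, and the three-dimensional vectors here are toy values, not real model output.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings", made up for illustration.
cat = [1.0, 0.0, 1.0]
kitten = [2.0, 0.0, 2.0]   # points in the same direction as `cat`
invoice = [0.0, 3.0, 0.0]  # orthogonal to `cat`

print(cosine_similarity(cat, kitten))   # close to 1: very similar
print(cosine_similarity(cat, invoice))  # 0: unrelated
```

Vectors that point the same way score near 1 regardless of their length, while orthogonal vectors score 0. Real embeddings have hundreds or thousands of dimensions, but the calculation is the same.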

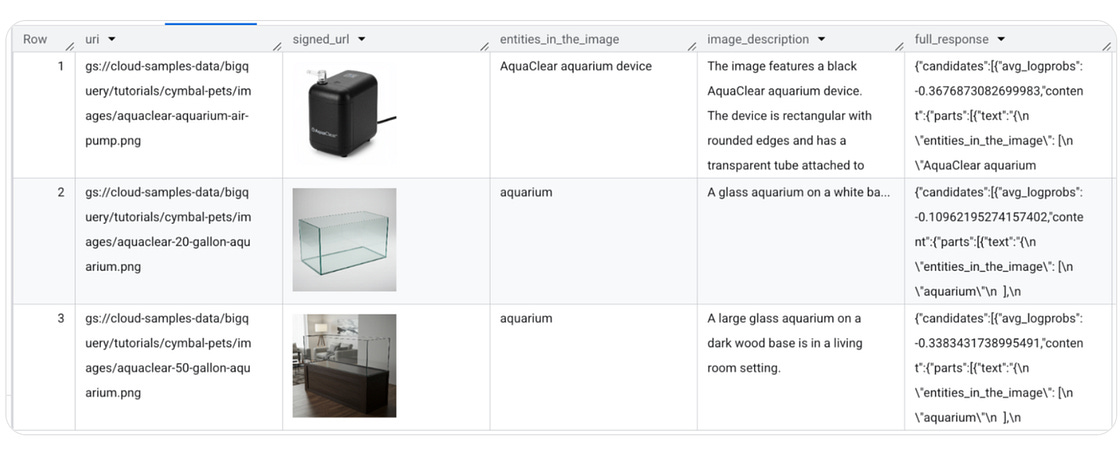

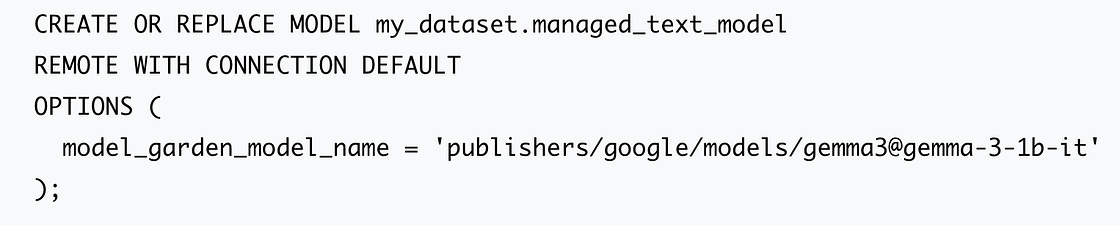

Continuing on the BigQuery features, you can now do your inferencing on open models (like Gemma) directly in BigQuery using SQL-native commands. It provides a managed infrastructure so you don’t have to provision GPUs manually just to run a quick inference job. Check out the post.

BigQuery AI Hackathon results are out. Take a look at the winners. The post also contains a good roundup of recently released AI features in BigQuery.

Databases

If you are into Spanner, here is a great summary of Spanner updates in 2025. These updates range from AI capabilities and Spanner Graph updates to several migration, cost, and efficiency improvements.

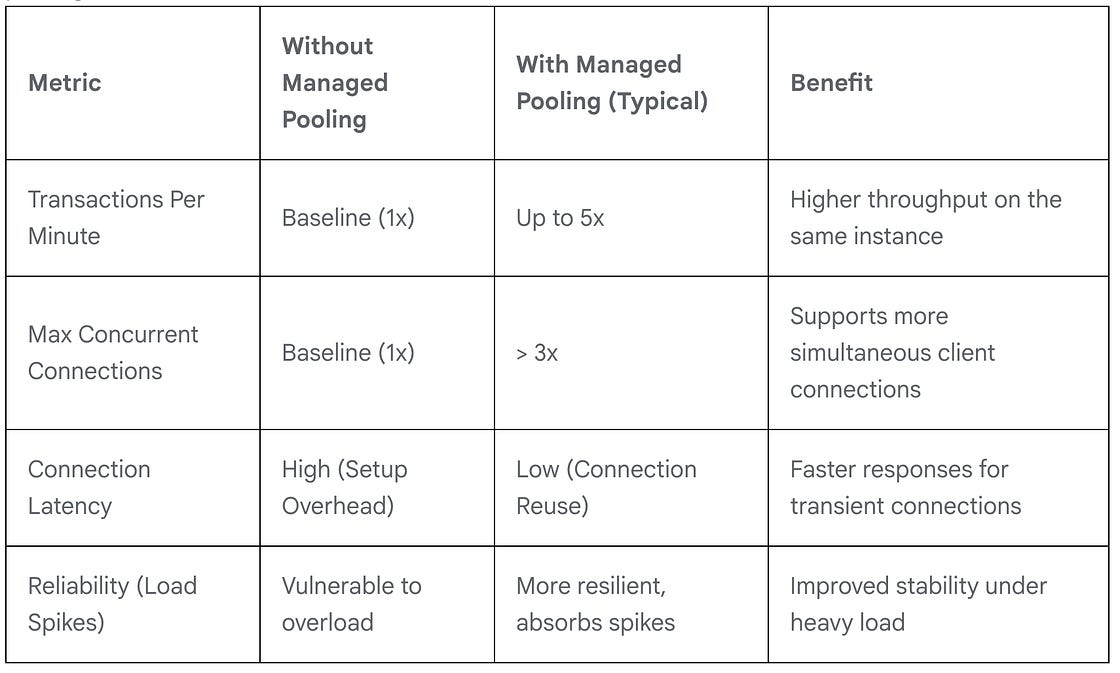

AlloyDB now offers built-in managed connection pooling. The blog post dives into the costs of managing connection pooling on your own and what the built-in managed connection pooling brings to the table. Check out the post and getting started instructions.
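If you have not run a connection pool yourself, the sketch below shows the basic idea that a pooler manages: open a small, fixed set of connections once and hand them out for reuse. It is a generic illustration, not AlloyDB's implementation. `FakeConnection` and `ConnectionPool` are hypothetical names, and the "connection" does no real I/O.

```python
import queue
from contextlib import contextmanager


class FakeConnection:
    """Stand-in for a real database connection (hypothetical)."""
    opened = 0  # counts how many connections were ever created

    def __init__(self):
        FakeConnection.opened += 1
        self.id = FakeConnection.opened

    def execute(self, sql):
        return f"conn {self.id} ran: {sql}"


class ConnectionPool:
    """Fixed-size pool: connections are created once and then reused."""

    def __init__(self, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(FakeConnection())

    @contextmanager
    def connection(self, timeout=5.0):
        conn = self._idle.get(timeout=timeout)  # blocks if all are busy
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand the connection back for reuse


pool = ConnectionPool(size=2)
results = []
for i in range(5):
    with pool.connection() as conn:
        results.append(conn.execute(f"SELECT {i}"))

print(FakeConnection.opened)  # connections created for 5 queries
print(results[0])
```

Five queries run over only two connections. A production pooler also has to handle sizing, timeouts, broken connections, and monitoring, which is the operational work a managed offering takes off your plate.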

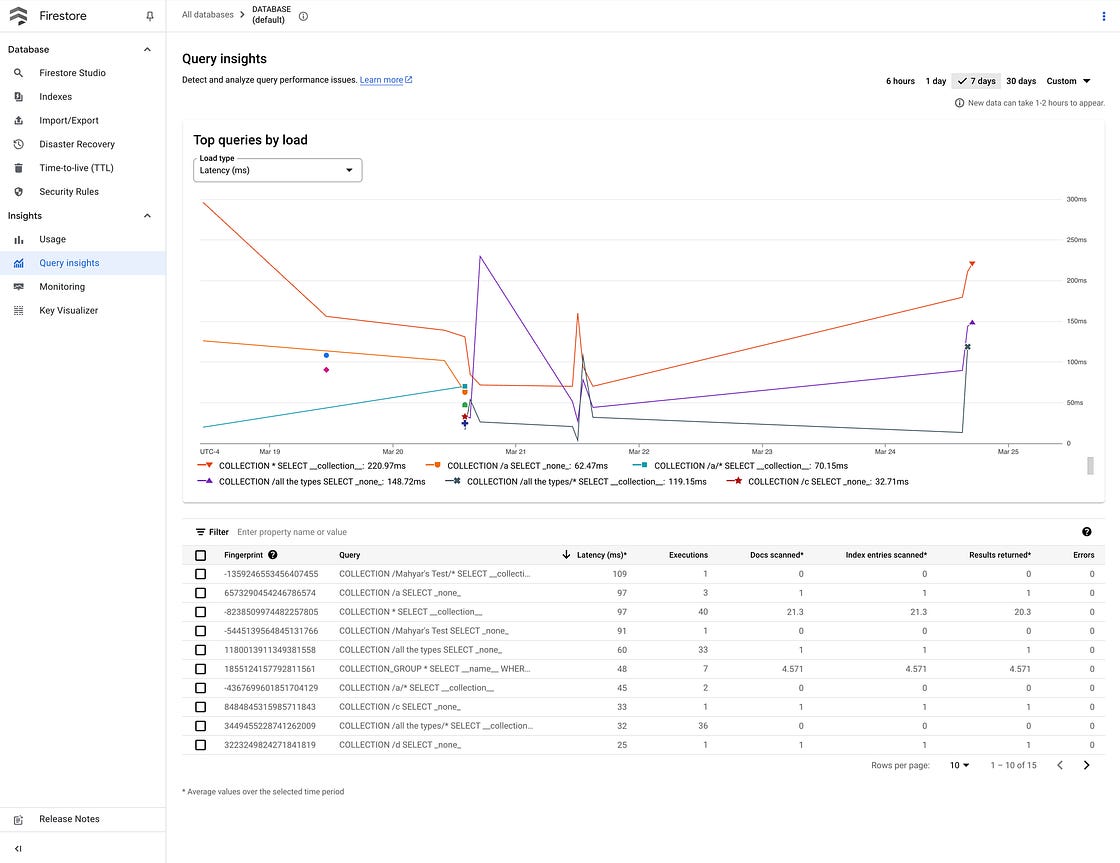

Google Cloud has introduced an advanced query engine for Firestore Enterprise edition that significantly enhances data processing capabilities through “pipeline operations.” This update moves beyond Firestore’s traditional reliance on automatic indexing while reducing operational overhead. Some of the key features include:

Over 100 new capabilities to chain stages for complex tasks like grouping, filtering, and multi-dimensional aggregations directly in the database.

Queries can now run without mandatory indexes, giving developers full autonomy over when to create them to optimize performance.

New “query explain” and “query insights” tools to profile execution paths, monitor latency, and identify where indexing is needed.

Check out the blog post for a full set of features announced.
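The idea of chaining stages can be illustrated in a few lines of plain Python. This is a conceptual sketch only and does not use the Firestore SDK. The stage helpers (`where`, `group_sum`, `sort_by`, `run_pipeline`) and the sample orders are hypothetical, made up to show how each stage consumes the previous stage's output.

```python
from collections import defaultdict


def where(predicate):
    """Stage: keep only documents matching the predicate."""
    return lambda docs: [d for d in docs if predicate(d)]


def group_sum(key, field):
    """Stage: group by `key` and sum `field` within each group."""
    def stage(docs):
        totals = defaultdict(int)
        for d in docs:
            totals[d[key]] += d[field]
        return [{key: k, "total": v} for k, v in totals.items()]
    return stage


def sort_by(field, descending=False):
    """Stage: order documents by `field`."""
    return lambda docs: sorted(docs, key=lambda d: d[field], reverse=descending)


def run_pipeline(docs, *stages):
    """Feed the output of each stage into the next one."""
    for stage in stages:
        docs = stage(docs)
    return docs


# Made-up sample documents.
orders = [
    {"city": "Bangkok", "status": "paid", "amount": 30},
    {"city": "Mumbai", "status": "paid", "amount": 50},
    {"city": "Bangkok", "status": "refunded", "amount": 20},
    {"city": "Bangkok", "status": "paid", "amount": 40},
]

report = run_pipeline(
    orders,
    where(lambda d: d["status"] == "paid"),
    group_sum("city", "amount"),
    sort_by("total", descending=True),
)
print(report)
```

Here the filtering, grouping, and sorting happen in application code. The appeal of pipeline operations is that the same kind of chain can run inside the database, so only the final result leaves it.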

Containers & Kubernetes

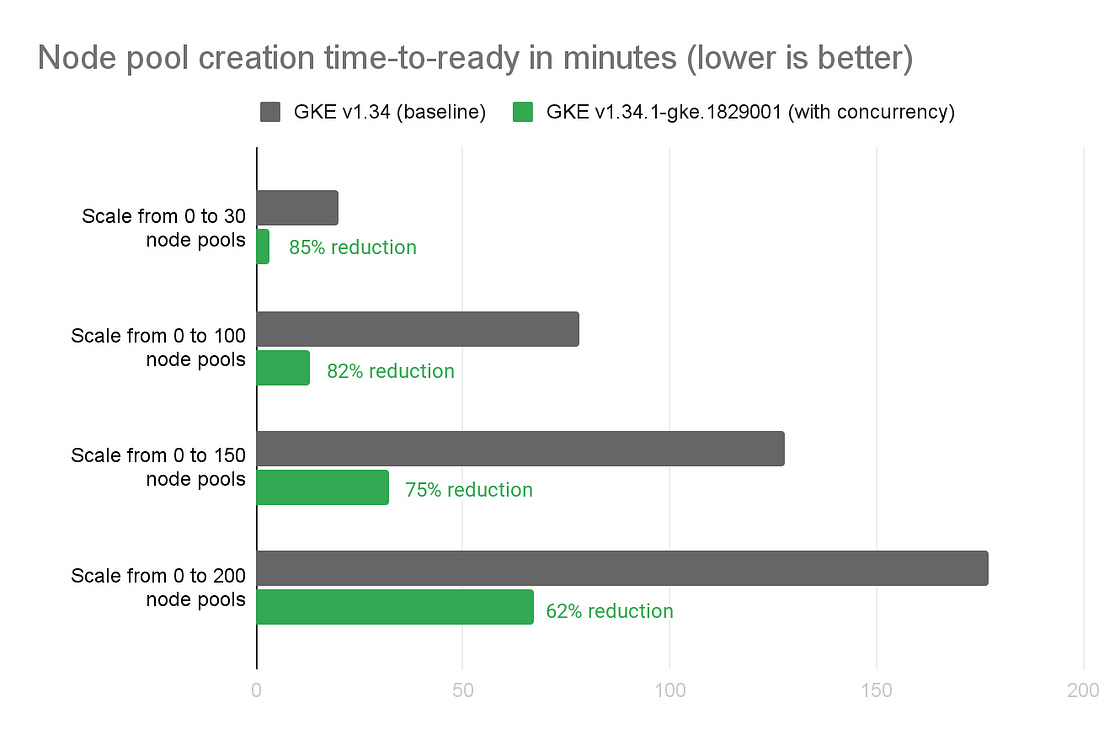

New enhancements for GKE Autopilot have been announced. The key feature is concurrency support in Google Kubernetes Engine (GKE) node pool auto-creation, which significantly reduces provisioning latency and improves autoscaling performance. Check out the blog post to understand why this matters, the overall solution, and the performance gains obtained.

Identity & Security

This edition sees two Cloud CISO Perspectives for January 2026 being released. The first one provides practical guidance on building with SAIF (Secure AI Framework), focusing on how to secure AI workloads. The guidance makes three points: data is the new perimeter, prompts should be treated as code, and secure agentic AI requires identity propagation.

The second instalment focuses on the top 5 priorities for 2026, which include aligning compliance with resilience, securing the AI supply chain (SLSA/SBOM), and managing identity in an agentic world.

Compute

A new Google Cloud region has launched in Bangkok, Thailand. If you have users in the region, do consider running latency tests. And, as always, check which services are available in the new region. For more on the announcement, check out the blog post and do look at the full list of Google Cloud regions.

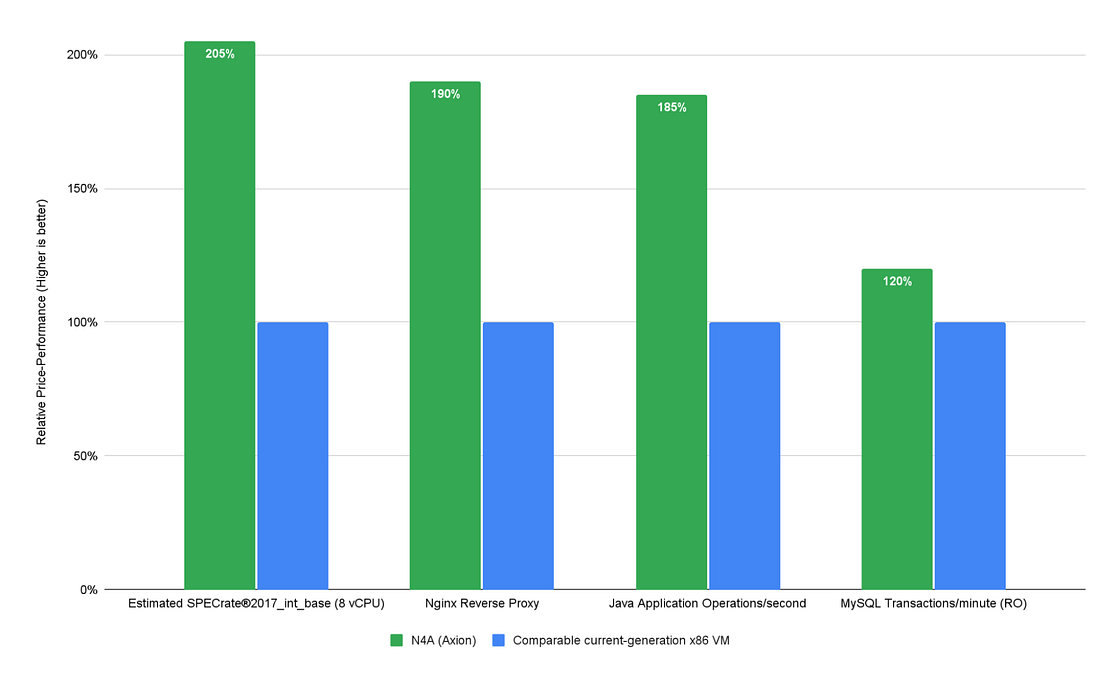

Google’s custom ARM-based Axion processors now power the N4A machine series, which is generally available. They deliver up to 2x better price-performance compared to x86 equivalents. ARM in the data center is a key optimization strategy, and for scale-out web tiers or containerized microservices, you should consider evaluating it. Check out the blog post.

Developers & Practitioners

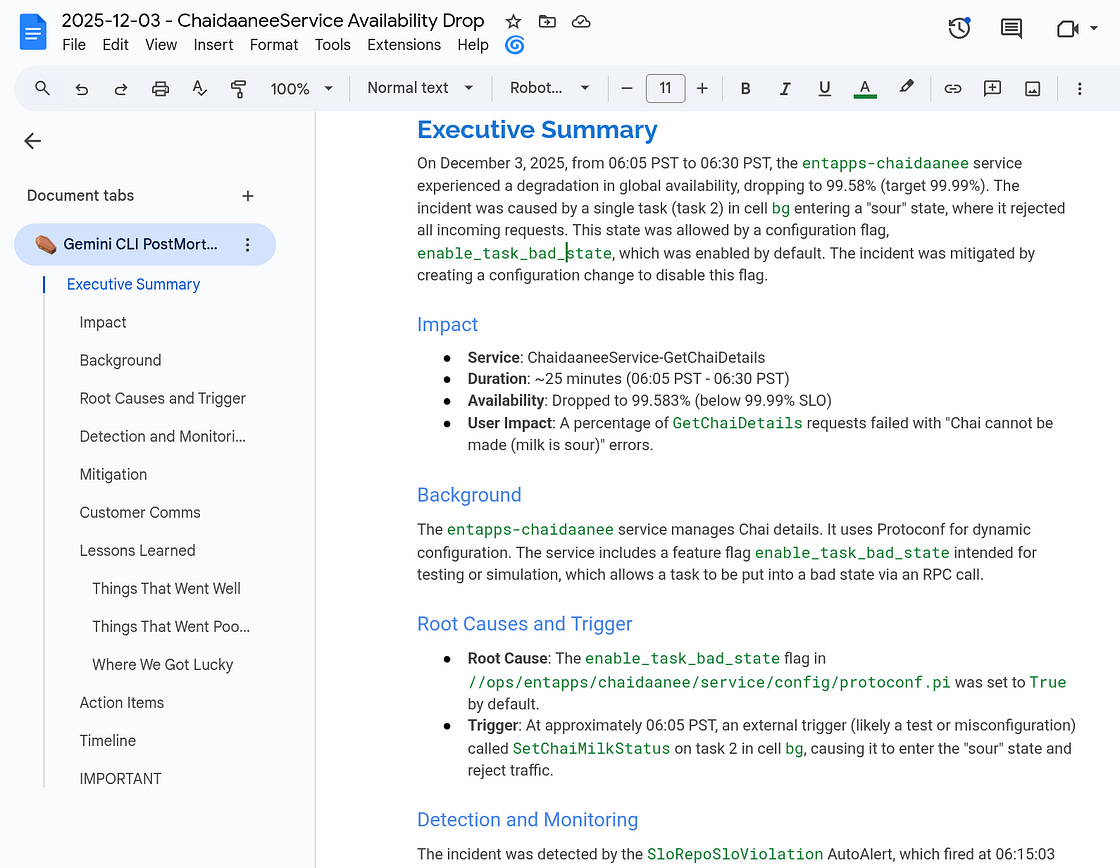

Google Site Reliability Engineers (SREs) are actively employing AI to reduce manual work (toil) and fix problems faster. Check out this blog post, which walks through a sample incident using a “4-Step Incident Workflow” and shows how Gemini CLI helps at each stage:

Paging and Investigation

Mitigation

Root Cause and Fix

The Postmortem

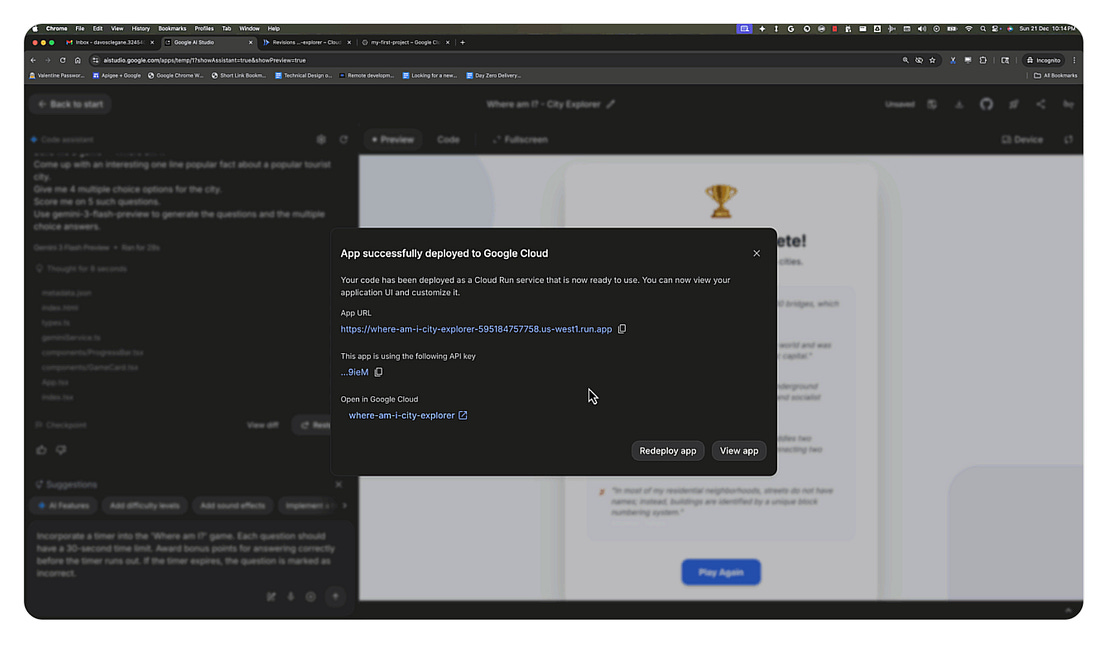

Gemini 3 is a great model and builders have been busy vibe coding applications. But the process often stops abruptly at the next step, because deploying an application to the cloud requires you to understand billing, cloud infrastructure, and more. Help is on the way: in this two-part series, you will not just understand how to start building your own application using Gemini. The second part also demonstrates how to use “one-click” deployment from AI Studio to ship your AI applications to Google Cloud Run. Check out the articles here:

Part 1: Getting Started with Gemini 3: Hello World with Gemini 3 Flash

Part 2: Getting Started with Gemini 3: Deploy Your First Gemini 3 App to Google Cloud Run

Looking for a full-stack architecture using Dart and Flutter to build and deploy applications on Cloud Run? Check out this guide, which dives into this scenario and highlights using a single language across the entire stack, with a shared package for data models and business logic. The workflow is streamlined using Dart Workspaces for monorepo management and GitHub Actions for automated CI/CD.

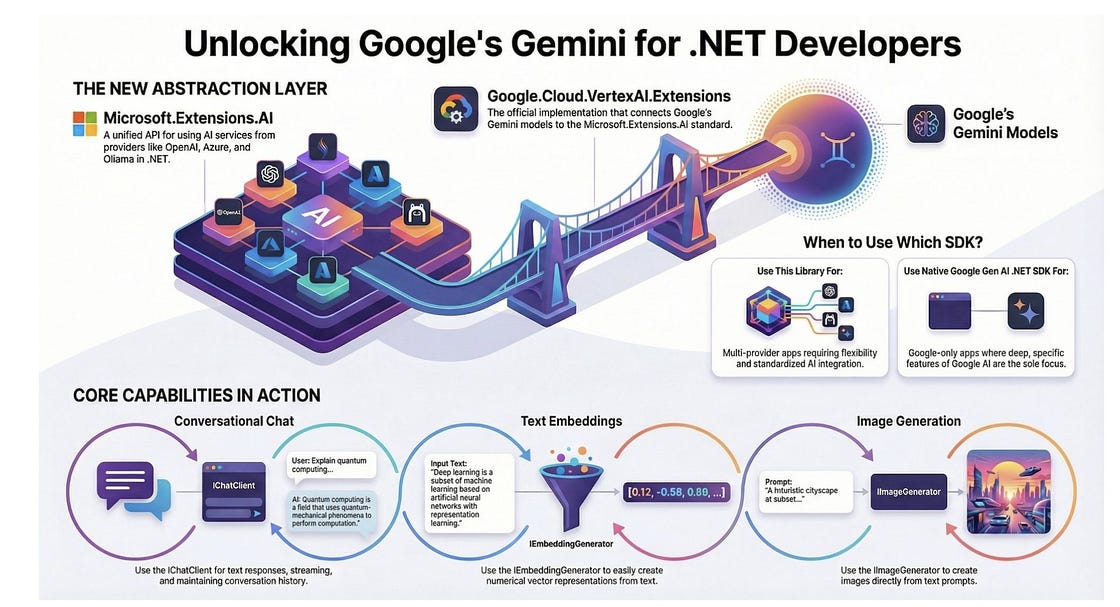

Google has introduced Google.Cloud.VertexAI.Extensions, a new library currently in pre-release that enables .NET developers to integrate Google’s Gemini models on Vertex AI using the standardized Microsoft.Extensions.AI abstractions. Check out the blog post.

Learning Center

If you are looking for a curated learning path to take your AI projects from prototype to production, check out the Production-Ready AI with Google Cloud Learning Path. The series is free and I suggest bookmarking it in case there are any updates.

If you are a developer that loves being in the terminal, Gemini CLI is a great tool. Here is a free, two-hour course titled Gemini CLI: Code & Create with an Open-Source Agent, developed by Google Cloud in partnership with DeepLearning.ai.

The curriculum covers installation, context management using GEMINI.md, and extending functionality via Model Context Protocol (MCP) servers. It highlights practical workflows for software development, data analysis, and automated content creation, aiming to help both developers and non-developers integrate the Gemini CLI into their daily tasks.

Write for Google Cloud Medium publication

If you would like to share your Google Cloud expertise with your fellow practitioners, consider becoming an author for the Google Cloud Medium publication. Reach out to me via comments and/or fill out this form and I’ll be happy to add you as a writer.

Stay in Touch

Have questions, comments, or other feedback on this newsletter? Please send Feedback.

If any of your peers are interested in receiving this newsletter, send them the Subscribe link.